Introduction

Most SaaS teams want “AI features” and “better analytics” at the same time.

Then reality hits. Your product events are inconsistent, your metrics disagree across tools, and the first model you ship can’t be reproduced a week later.

This article is about designing the data layer so those problems don’t keep coming back. We’ll cover:

- Event tracking that does not collapse under product changes

- A feature store that keeps training and inference aligned

- Analytics that answers real questions (not vanity dashboards)

- A pragmatic boilerplate starter you can adapt, not worship

Insight: If you can’t explain how a number was produced, you don’t have analytics. You have decoration.

What “AI ready” actually means (in practice)

AI ready is not a badge. It’s a set of boring guarantees:

- You can reconstruct a user journey from raw events

- You can replay a metric and get the same result

- You can train and serve features from the same definitions

- You can control access to sensitive fields without hand edited queries

If you can do those four things, adding AI becomes engineering work, not archaeology.

A quick note on examples

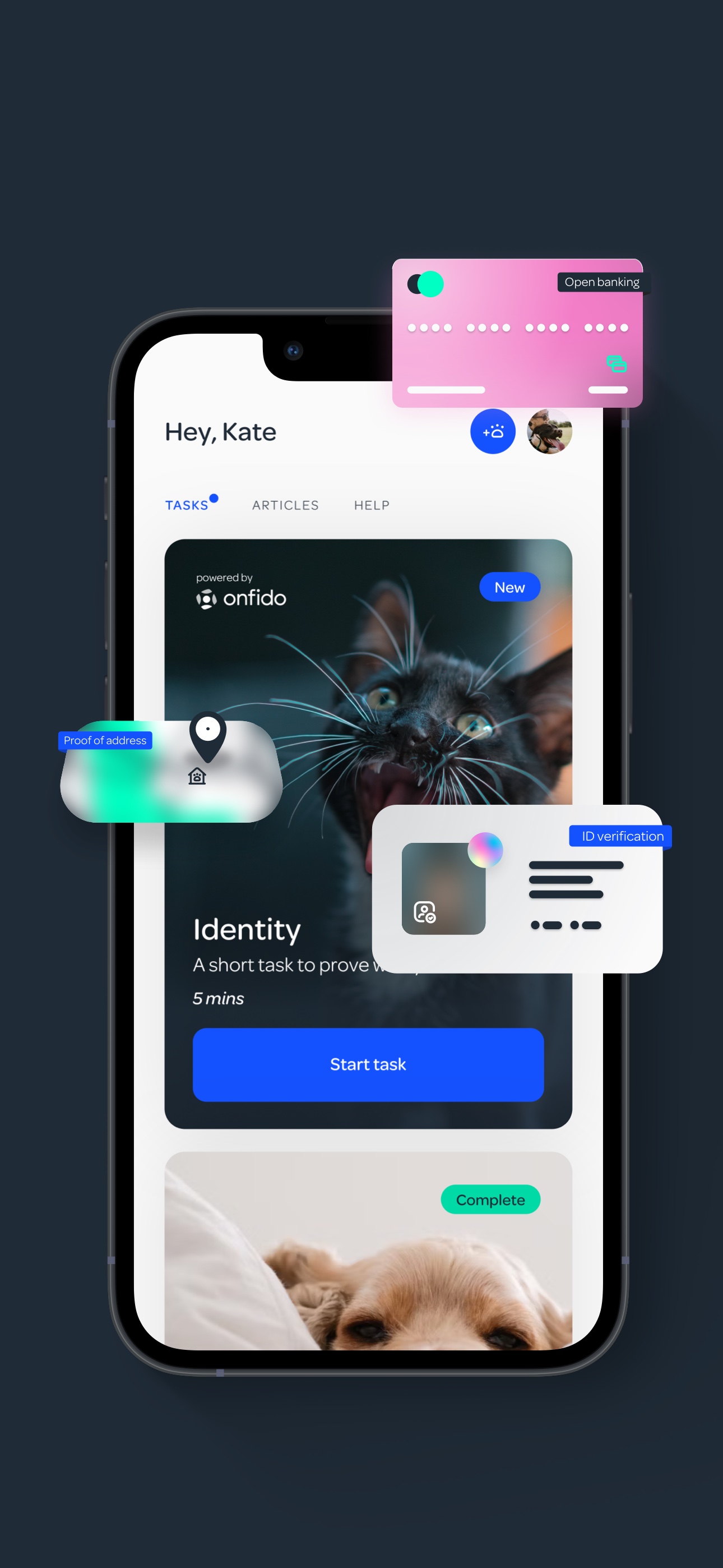

We’ll reference patterns we’ve used across Apptension delivery work, including commerce and identity heavy products like blkbx and PetProov. The details differ by product, but the data layer problems rhyme: attribution, trust, fraud signals, and fast iteration without breaking reporting.

Boilerplate starter checklist

A minimal repo structure that stays understandable

- events/: event specs (name, version, fields, examples)

- instrumentation/: client and server emitters

- ingestion/: validation, quarantine, routing

- warehouse/: raw tables, modeled tables, tests

- metrics/: metric definitions and unit tests

- features/: feature definitions, offline and online materialization

- governance/: field classification, access rules, audit hooks

What to keep out at first:

- Custom dashboards for every stakeholder

- Dozens of derived tables with unclear owners

- One off features that nobody will maintain

The challenge: SaaS data breaks in predictable ways

You usually don’t “lack data”. You lack data you can trust.

Here are failure modes we see most often:

- Events change names or payloads without versioning

- Frontend tracking drifts from backend reality

- Teams define the same metric three ways (and argue in Slack)

- Models train on one snapshot and infer on another

- PII leaks into places it should not exist

Key Stat: 76% of consumers get frustrated when organizations fail to deliver personalized interactions.

Personalization is downstream of measurement. If your measurement is shaky, your personalization will be too.

Why event tracking goes wrong

Event tracking fails for boring reasons:

- Product ships fast

- Tracking is “just add a call” work

- Nobody owns the schema

Common symptoms:

- “signup” means account created in one tool and email verified in another

- A funnel drop is actually a tracking gap

- A marketing campaign looks like it worked because attribution is wrong

Why analytics and AI collide

Analytics wants stable definitions. AI wants stable features. Both rely on stable event semantics.

When those semantics drift, you get:

- Models that look great offline and fail in production

- Experiments you cannot interpret

- Teams that stop trusting dashboards

Insight: The fastest way to kill an AI roadmap is to ship a model that can’t be reproduced and can’t be explained.

What a solid data layer buys you

- Fewer metric debates because definitions live in code

- Faster experiments because event changes are versioned

- Safer compliance posture because PII handling is explicit

- Better model performance over time because features don’t drift silently

- Lower onboarding cost for new engineers and analysts

What to track to know your data layer is working

If you cannot measure these, you are guessing:

- Event delivery success: accepted events versus emitted events

- Freshness for core metrics: from event to warehouse table

- Schema violation rate: events quarantined for review
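As a rough sketch, the three signals above can be computed from simple per-window counters and timestamps. The `DeliveryWindow` type and its counter names are assumptions made for illustration; they do not come from any specific tool.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DeliveryWindow:
    """Hypothetical counters collected for one time window."""
    emitted: int      # events the emitters report having sent
    accepted: int     # events that passed validation at ingestion
    quarantined: int  # events held back for review


def delivery_success_rate(w: DeliveryWindow) -> float:
    """Accepted events versus emitted events."""
    return w.accepted / w.emitted if w.emitted else 0.0


def schema_violation_rate(w: DeliveryWindow) -> float:
    """Share of received events that ended up in quarantine."""
    received = w.accepted + w.quarantined
    return w.quarantined / received if received else 0.0


def freshness_seconds(occurred_at: datetime, loaded_at: datetime) -> float:
    """Time from the event happening to it landing in a warehouse table."""
    return (loaded_at - occurred_at).total_seconds()


window = DeliveryWindow(emitted=1000, accepted=970, quarantined=20)
occurred = datetime(2026, 1, 31, 12, 0, tzinfo=timezone.utc)
loaded = datetime(2026, 1, 31, 12, 5, tzinfo=timezone.utc)
print(delivery_success_rate(window))
print(schema_violation_rate(window))
print(freshness_seconds(occurred, loaded))
```

The gap between emitted and accepted plus quarantined (10 events in this made-up window) is the part worth alerting on: those events were lost before validation ever saw them.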

A reference architecture for an AI ready SaaS data layer

You don’t need a perfect enterprise stack. You need clear boundaries.

Contracts and five layers

Events are a product API

A pragmatic stack has clear boundaries: instrumentation → ingestion → warehouse or lakehouse → semantic layer → feature layer. The fastest win is a minimum event contract you actually enforce:

- Stable name + integer version

- Event time and ingestion time

- Actor IDs (user, workspace, device)

- Context (app version, platform, locale)

- Typed payload with optional fields

Mitigation when teams ship fast: add an event schema registry, edge validation (reject or quarantine bad events), and raw immutable storage for replay and audits. In our delivery work on commerce and identity heavy products (for example blkbx, PetProov), this is where attribution and trust signals stop drifting. Measure: end to end latency, delivery success rate, schema validation failure rate, and feature freshness.

At a high level, a pragmatic architecture has five layers:

- Instrumentation layer (client and server events)

- Ingestion layer (stream or batch)

- Warehouse or lakehouse (raw and modeled tables)

- Semantic layer (metrics and dimensions)

- Feature layer (feature store for training and serving)

Insight: Treat events as a product API. Version them like one.

The minimum set of data contracts

If you do only one thing, do this: define a contract for events and enforce it.

A practical contract includes:

- Event name (stable)

- Event version (integer)

- Timestamp (event time and ingestion time)

- Actor (user id, workspace id, device id)

- Context (app version, platform, locale)

- Payload (typed fields, optional fields)

Core building blocks

- Event schema registry: One place to define and review event contracts

- Validation at the edge: Reject or quarantine malformed events early

- Raw immutable storage: Keep original events for replay and audits

- Modeled tables: Build clean, queryable facts and dimensions

- Metric definitions as code: One source of truth for KPIs

- Feature definitions as code: Same logic for training and inference
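To make the first two building blocks concrete, here is a minimal sketch of a registry lookup plus quarantine routing. The in-memory `REGISTRY`, the `check_payload` and `route` helpers, and the payload fields are all hypothetical; a real setup would load specs from the shared repo and write to durable storage.

```python
from typing import Any

# Hypothetical registry: (event name, version) -> required payload fields and types.
REGISTRY: dict[tuple[str, int], dict[str, type]] = {
    ("checkout_payment_confirmed", 2): {
        "order_id": str,
        "currency": str,
        "amount_cents": int,
    },
}


def check_payload(event: dict[str, Any]) -> list[str]:
    """Return contract violations; an empty list means the event is valid."""
    key = (event.get("name"), event.get("version"))
    schema = REGISTRY.get(key)
    if schema is None:
        return [f"unknown event or version: {key}"]
    payload = event.get("payload", {})
    errors = []
    for field, expected in schema.items():
        if field not in payload:
            errors.append(f"missing payload field: {field}")
        elif not isinstance(payload[field], expected):
            errors.append(f"wrong type for {field}")
    return errors


def route(event: dict[str, Any], accepted: list, quarantine: list) -> None:
    """Keep valid events in `accepted`; keep invalid ones, with reasons, in `quarantine`."""
    errors = check_payload(event)
    if errors:
        quarantine.append({"event": event, "errors": errors})
    else:
        accepted.append(event)
```

Quarantining with the reasons attached, rather than dropping, is what makes later review and replay possible.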

A quick comparison: where each tool fits

| Layer | What it does | What breaks if you skip it | What to measure |
|---|---|---|---|
| Instrumentation | Emits consistent events | Funnels lie | Event coverage rate, schema error rate |
| Ingestion | Moves events reliably | Data loss, lag | Delivery success rate, end to end latency |
| Warehouse | Stores and models data | No history, no joins | Freshness, query cost, table growth |
| Semantic layer | Defines metrics | Metric drift | Metric consistency checks |
| Feature store | Serves features consistently | Training serving skew | Feature freshness, drift metrics |

Where regulated and enterprise constraints show up

From our enterprise architecture work, the constraints are usually not about storage. They’re about control:

- Zero trust access: assume every system and user is untrusted by default

- Data minimization: store only what you need, for as long as you need

- Auditability: show who accessed what and why

If you plan for those early, you avoid painful retrofits later.
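A minimal sketch of what "control" can look like in code: classify fields once, filter by role, and log every read. The field classes, role names, and the `read_row` helper are assumptions for illustration, and a production system would enforce this in the warehouse or access layer rather than in application code.

```python
from datetime import datetime, timezone

# Hypothetical classification. Unlisted fields are treated as PII (deny by default).
FIELD_CLASS = {
    "user_id": "internal",
    "email": "pii",
    "amount_cents": "internal",
    "country": "public",
}
# Hypothetical roles and the field classes each may read.
ROLE_CLEARANCE = {
    "analyst": {"public", "internal"},
    "support": {"public", "internal", "pii"},
}

AUDIT_LOG: list[dict] = []


def read_row(row: dict, role: str, reason: str) -> dict:
    """Return only the fields the role may see, and record who read what and why."""
    allowed = ROLE_CLEARANCE.get(role, set())  # unknown roles see nothing
    visible = {k: v for k, v in row.items() if FIELD_CLASS.get(k, "pii") in allowed}
    AUDIT_LOG.append({
        "role": role,
        "reason": reason,
        "fields": sorted(visible),
        "at": datetime.now(timezone.utc).isoformat(),
    })
    return visible
```

Because unlisted fields default to the most restrictive class, a new column stays hidden until someone classifies it.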

Failure modes to plan for

These show up even in strong teams

- Backfills take too long: design partitioning and incremental models early

- Identity stitching is messy: document rules for anonymous to known users

- Events arrive late: use event time and watermarks, not ingestion time

- Teams bypass the schema: enforce validation in CI and ingestion

- Costs creep up: measure query spend and table growth from day one
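For the late-events item, here is a small sketch of counting by event time with a watermark. The `HourlyCounter` class and the 10 minute `ALLOWED_LATENESS` are assumptions; stream processors ship their own watermark mechanics, and this only illustrates the idea.

```python
from collections import defaultdict
from datetime import datetime, timedelta

ALLOWED_LATENESS = timedelta(minutes=10)  # assumed tolerance; tune per pipeline


class HourlyCounter:
    """Counts events per event-time hour and sets aside events that arrive too late."""

    def __init__(self) -> None:
        self.counts = defaultdict(int)
        self.late = []
        self.max_event_time = None

    def add(self, occurred_at: datetime) -> None:
        window_start = occurred_at.replace(minute=0, second=0, microsecond=0)
        window_end = window_start + timedelta(hours=1)
        if self.max_event_time is not None:
            # The watermark trails the newest event time seen so far.
            watermark = self.max_event_time - ALLOWED_LATENESS
            if window_end <= watermark:
                # Window already closed: keep the event for a backfill instead.
                self.late.append(occurred_at)
                return
        self.counts[window_start] += 1
        if self.max_event_time is None or occurred_at > self.max_event_time:
            self.max_event_time = occurred_at
```

Events that miss the watermark are not discarded. They go to `late` so an incremental backfill can correct the closed window.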

Event tracking that survives product change

The goal is not “track everything”. The goal is to track the things you can act on.

Predictable data failures

What breaks first

Most SaaS teams do not “lack data.” They lack data they can trust. Common failure modes:

- Events renamed or payloads changed without versioning.

- Frontend tracking drifts from backend reality (funnels lie).

- One metric gets defined three ways (arguments replace decisions).

- Models train on one snapshot and infer on another.

- PII leaks into places it should not exist.

The downstream cost shows up as failed personalization: 76% of consumers get frustrated when organizations fail to deliver personalized interactions. Track leading indicators: schema error rate, event coverage rate, and metric consistency checks across tools.

Start with questions, not events

Before you add an event, write the question it answers.

Examples:

- Where do users abandon onboarding?

- Which features correlate with week 4 retention?

- Which checkout steps drive support tickets?

Then map each question to:

- A metric definition

- A set of required events

- Ownership (who maintains it)
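One lightweight way to keep that mapping honest is to store it as data next to the event specs. In the sketch below, `MetricSpec`, the metric and event names, and the owner label are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricSpec:
    question: str                     # the question this metric answers
    metric: str                       # name of the metric definition
    required_events: tuple[str, ...]  # events the metric depends on
    owner: str                        # who maintains it


SPECS = [
    MetricSpec(
        question="Where do users abandon onboarding?",
        metric="onboarding_step_completion_rate",
        required_events=("onboarding_step_viewed", "onboarding_step_completed"),
        owner="growth-team",
    ),
]


def missing_events(spec: MetricSpec, tracked: set[str]) -> list[str]:
    """Events a metric needs that are not instrumented yet."""
    return [e for e in spec.required_events if e not in tracked]


tracked_events = {"onboarding_step_viewed", "signup_completed"}
for spec in SPECS:
    print(spec.metric, "is missing:", missing_events(spec, tracked_events))
```

Running a check like this in CI flags a metric whose events were never shipped before anyone builds a dashboard on it.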

A practical instrumentation workflow

- Write the event spec in a shared repo (name, version, fields, examples)

- Add server side events for source of truth actions (payments, verification, permissions)

- Add client side events only for UX context (clicks, views, errors)

- Validate events at ingestion (schema checks, required fields)

- Backfill and replay when specs change (don’t “just start from today”)

Example: commerce flows and one click checkout

In commerce products like blkbx, the temptation is to track every click. The better approach is to track state transitions.

For a simple checkout, you care about:

- Offer created

- Payment intent created

- Payment confirmed

- Fulfillment started

Everything else is supporting context.

Example: In checkout heavy builds, we’ve had the cleanest analytics when the backend emits the canonical events and the frontend only adds UI context like “step viewed” or “error shown”.
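Tracking state transitions makes funnel position a simple computation. The sketch below assumes the four checkout events are named as in `CHECKOUT_ORDER` and always occur in that order; both are assumptions for illustration, not the schema of any specific product.

```python
from typing import Optional

# Assumed canonical order of the checkout state transitions.
CHECKOUT_ORDER = [
    "offer_created",
    "payment_intent_created",
    "payment_confirmed",
    "fulfillment_started",
]


def furthest_state(events: list[str]) -> Optional[str]:
    """Return the furthest state reached in order, ignoring supporting context events."""
    reached = -1
    for name in events:
        # Only advance when the next expected transition shows up.
        if name in CHECKOUT_ORDER and CHECKOUT_ORDER.index(name) == reached + 1:
            reached += 1
    return CHECKOUT_ORDER[reached] if reached >= 0 else None


session = ["offer_created", "step_viewed", "payment_intent_created", "error_shown"]
print(furthest_state(session))
```

A session that shows `payment_confirmed` without a preceding `payment_intent_created` does not advance here, which is a useful hint that you are looking at a tracking gap rather than real user behavior.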

What fails, and how to mitigate it

Common failures:

- Event spam: too many low value events

- Silent schema drift: fields change without review

- Identity mismatch: anonymous and logged in users never get stitched

Mitigations that work:

- Define a short list of “golden events” that power core metrics

- Add a schema linter in CI for tracking code

- Use explicit identity events (identify, alias) and document rules
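For the identity mitigation, a minimal stitching sketch follows. The event shape and the "first alias wins" rule are assumptions; whichever rule you choose, the point is that it is written down and testable.

```python
def build_identity_map(events: list[dict]) -> dict[str, str]:
    """Map anonymous device ids to user ids using explicit alias events."""
    mapping: dict[str, str] = {}
    for e in events:
        if e["name"] == "alias":
            # Assumed rule: the first alias seen for a device wins.
            mapping.setdefault(e["device_id"], e["user_id"])
    return mapping


def stitch(events: list[dict], mapping: dict[str, str]) -> list[dict]:
    """Attach a resolved user id to each event; None when the device was never aliased."""
    return [
        {**e, "resolved_user_id": e.get("user_id") or mapping.get(e.get("device_id"))}
        for e in events
    ]


events = [
    {"name": "page_viewed", "device_id": "dev_1"},
    {"name": "alias", "device_id": "dev_1", "user_id": "usr_123"},
    {"name": "checkout_started", "device_id": "dev_1", "user_id": "usr_123"},
    {"name": "page_viewed", "device_id": "dev_2"},
]
stitched = stitch(events, build_identity_map(events))
```

Here the anonymous `page_viewed` on `dev_1` gets attributed to `usr_123` after the fact, while `dev_2` stays unresolved instead of being guessed.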

Code example: an event contract and validation

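```json
{
  "name": "checkout_payment_confirmed",
  "version": 2,
  "occurred_at": "2026-01-31T12:34:56Z",
  "actor": {
    "user_id": "usr_123",
    "workspace_id": "wsp_456"
  },
  "context": {
    "app_version": "1.18.0",
    "platform": "web"
  },
  "payload": {
    "order_id": "ord_789",
    "currency": "USD",
    "amount_cents": 12900,
    "payment_provider": "stripe"
  }
}
```

```python
# Minimal validation for the event above
REQUIRED = ["name", "version", "occurred_at", "actor", "context", "payload"]

def validate_event(event: dict) -> None:
    """Raise ValueError if the event breaks the contract."""
    missing = [k for k in REQUIRED if k not in event]
    if missing:
        raise ValueError(f"missing required fields: {missing}")
    if not isinstance(event["version"], int):
        raise ValueError("version must be an integer")
    # Every event needs at least one actor id so it can be stitched later.
    if "user_id" not in event["actor"] and "device_id" not in event["actor"]:
        raise ValueError("actor needs a user_id or a device_id")
```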

Feature stores: the boring bridge between analytics and AI

A feature store is not a magic box. It’s a system for making sure the same feature means the same thing everywhere.

AI ready means guarantees

Four checks before models

AI ready is not “we have data.” It is four boring guarantees:

- Reconstruct journeys from raw events (not screenshots of dashboards).

- Replay metrics and get the same result next week (same inputs, same logic).

- Train and serve from one definition (avoid training serving skew).

- Control access to sensitive fields without hand edited queries (role based access, audited).

If you cannot do these, you will ship a model you cannot reproduce or explain. Measure it: metric replay pass rate, feature parity tests, and PII access audit coverage. If you do not track these yet, treat them as hypotheses and instrument them first.

If you skip the feature store, you often end up with:

- One set of features in notebooks

- Another set in the app

- A third set in dashboards

That is how training serving skew happens.

Insight: Most “model failures” we debug are data definition failures.
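One way to avoid that skew is a single feature function that both the training and the serving path call. The sketch below uses a hypothetical `purchases_last_30d` feature with an explicit `as_of` time; the helper names and the row format are assumptions, not the API of any particular feature store.

```python
from datetime import datetime, timedelta


def purchases_last_30d(purchase_times: list[datetime], as_of: datetime) -> int:
    """Count purchases in the 30 days up to and including `as_of`, ignoring anything later."""
    start = as_of - timedelta(days=30)
    return sum(1 for t in purchase_times if start < t <= as_of)


def training_row(purchase_times: list[datetime], label_time: datetime) -> dict:
    # Offline: compute the feature as of the moment the label was observed,
    # so purchases made after that moment cannot leak into training data.
    return {"purchases_last_30d": purchases_last_30d(purchase_times, label_time)}


def serving_row(purchase_times: list[datetime], now: datetime) -> dict:
    # Online: the same function, evaluated at request time.
    return {"purchases_last_30d": purchases_last_30d(purchase_times, now)}


history = [datetime(2026, 1, 2), datetime(2026, 1, 20), datetime(2026, 2, 10)]
print(training_row(history, datetime(2026, 1, 31)))
print(serving_row(history, datetime(2026, 2, 15)))
```

Passing `as_of` explicitly is the design choice that matters: the definition never reads the clock itself, so replaying it over old data gives the same answer every time.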

What belongs in a feature store (and what does not)

Good feature store candidates:

- User level aggregates (purchases in last 30 days)

- Workspace health signals (active seats, churn risk proxies)

- Fraud and trust signals (verification status, dispute rate)

Bad candidates:

- Raw clickstream (too granular, too volatile)

- One off experiment features with no plan to maintain

Example: trust signals in identity heavy flows

In PetProov style products, trust is the product. Verification is not a single boolean.

You often need features like:

- Verification step completion rate

- Time to complete onboarding

- Number of failed attempts

- Discrepancy flags between submitted data and checks

Those features power:

- Risk scoring

- Support prioritization

- Product nudges

Example: When we build onboarding and verification flows, we try to define trust features early. It forces clarity on what “verified” actually means in the product.

Comparison table: three ways teams ship features

| Approach | Speed in week 1 | Reliability in month 3 | Who can maintain it | Typical failure |
|---|---|---|---|---|
| Notebook only | High | Low | One person | No reproducibility |
| App code only | Medium | Medium | Engineers | Training serving skew |
| Feature definitions as code | Medium | High | Engineers and data team | Requires discipline |

What to measure (if you want to be honest)

If you claim your feature store is working, measure:

- Feature freshness (p50, p95)

- Training serving skew checks (same input, same output)

- Drift metrics per key feature

- Model performance by segment over time

If you don’t have those numbers yet, treat your confidence as a hypothesis and instrument the checks first.
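For the drift item, one common choice is the population stability index (PSI). It is swapped in here as an illustration; nothing above prescribes it. The `psi` function name, the bin edges, and the floor for empty bins are assumptions you would tune per feature.

```python
import math


def psi(expected: list[float], actual: list[float], edges: list[float]) -> float:
    """Population stability index between two non-empty samples over shared bin edges."""

    def shares(values: list[float]) -> list[float]:
        counts = [0] * (len(edges) + 1)
        for v in values:
            # Bin index = number of edges at or below the value.
            counts[sum(1 for e in edges if v >= e)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    p, q = shares(expected), shares(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


training_sample = [1, 2, 2, 3, 3, 3, 4, 4, 5, 6]
serving_sample = [3, 4, 4, 5, 5, 5, 6, 6, 7, 8]
print(psi(training_sample, training_sample, edges=[2, 4, 6]))
print(psi(training_sample, serving_sample, edges=[2, 4, 6]))
```

Identical samples score zero and the score grows as the distributions separate. What threshold counts as "drifted" is a judgment call to calibrate on your own features.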

A simple measurement plan

If you want fewer debates, measure the plumbing

- Event coverage: percentage of key flows emitting all required events

- Metric reconciliation: same KPI across tools should match within a tolerance

- Data latency: p50 and p95 from event to modeled table

- Feature freshness: time since last update per feature

- Drift checks: per feature distribution shift by week and by segment
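Two of these checks fit in a few lines. The sketch below uses a nearest-rank percentile for latency and a relative tolerance for reconciliation; the function names and the 1% default tolerance are assumptions, not recommended values.

```python
import math


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest value with at least p percent of the data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def reconciles(a: float, b: float, rel_tolerance: float = 0.01) -> bool:
    """True when the same KPI reported by two tools agrees within a relative tolerance."""
    return math.isclose(a, b, rel_tol=rel_tolerance)


# Made-up latencies in seconds from event time to modeled table.
latencies = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
print(percentile(latencies, 50), percentile(latencies, 95))
print(reconciles(1000, 1005), reconciles(1000, 1020))
```

Deciding on the tolerance up front is what ends the debate: a 0.5% gap between tools passes, a 2% gap becomes a ticket.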

Conclusion

An AI ready SaaS data layer is not about fancy tools. It’s about repeatable definitions and clear ownership.

If you want a practical starting point, build a small boilerplate that enforces the basics:

- Event contracts with versioning

- Validation and quarantine for bad events

- Raw immutable storage for replay

- Modeled tables for facts and dimensions

- Metrics and features defined as code

Insight: The boilerplate is not the point. The habits are the point.

Next steps you can take this week

- Pick 10 to 20 golden events and write specs for them

- Define 5 to 10 core metrics and implement them in one place

- Add schema validation to your ingestion path

- Identify 3 to 5 features you would need for your first AI use case and define them as code

- Decide who owns each layer (product, engineering, data)

Common questions we hear

Do we need a feature store on day one? Not always. But you do need feature definitions that can be reused. If you can’t reuse them, a feature store later will be painful.

Should we track on the frontend or backend? Both, but for different reasons. Backend for canonical business events. Frontend for UX context.

What’s the fastest way to lose trust in analytics? Let metrics vary by tool. One definition, one place, and tests.

How do we keep PII out of the wrong places? Classify fields early, restrict access by default, and log access. Treat it like an engineering problem, not a policy doc.