Introduction

You do not need a perfect product to learn. You need a working product that can collect signal.

If you are building an AI powered SaaS, the first version usually fails for predictable reasons:

- The scope balloons because AI feels like magic, so everyone wants more

- The data is messier than expected

- Costs and latency are not measured until users complain

- The team spends weeks on plumbing that investors will never ask about

A SaaS boilerplate changes the shape of the work. Instead of starting with auth, billing, roles, admin, emails, and deployment, you start with the part that creates value: the workflow and the AI feature.

Insight: The fastest MVP is not the one with the fewest features. It is the one with the fewest unknowns.

In our work building SaaS products and AI prototypes, including a 13 week journey to an LLM driven prototype in Project LEDA, the pattern is consistent: speed comes from not making the same decision twice.

This article breaks down a pragmatic way to build an AI powered SaaS MVP faster with Apptension’s SaaS Boilerplate, with a timeline, realistic scope, and best practices. It also calls out what can go wrong and how to catch it early.

Key outcomes you should aim for:

- A usable product in users’ hands within weeks, not quarters

- A validation loop that produces measurable evidence for product market fit

- A fundraising narrative backed by real usage and unit economics, not demos

What we mean by an AI powered SaaS MVP

An AI powered SaaS MVP is not “a chatbot in your app.” It is a product where AI changes a user’s workflow in a measurable way.

A good MVP AI feature has:

- A clear input and output (documents in, structured fields out)

- A measurable success definition (accuracy, time saved, fewer manual steps)

- A fallback path when the model is wrong

If you cannot describe the fallback, you do not have an MVP. You have a demo.

MVP scorecard for AI powered SaaS

A quick gut check before you ship

Use this as a pre-launch checklist.

- User value: Can a user complete the core job end to end?

- AI reliability: Do you have a defined success metric and a fallback?

- Observability: Can you answer what happened for any AI run?

- Cost control: Do you have per user limits and a cost per run estimate?

- Support readiness: Do you have admin tools to fix issues without redeploying?

Scope the MVP like you have one month of runway

Early stage founders usually overbuild in two places:

- The product surface area (too many roles, settings, and edge cases)

- The AI system (too much autonomy, too little observability)

The boilerplate helps you avoid the first category. You still need discipline for the second.

The minimum scope that still feels like a product

Start with a scope that can support real users and real payments, even if the AI feature is narrow.

Include:

- Authentication and password reset

- Organization and roles (simple, not enterprise)

- Billing and plans (even if you comp later)

- Basic audit trail for AI actions (who ran what, on which input)

- Admin tools for support (impersonation, feature flags, usage view)

Defer:

- Complex permissions matrices

- Multiple integrations at once

- Fully custom analytics dashboards (start with events and a spreadsheet)

- AI agents that take actions without user review

Insight: If your MVP cannot be supported by one person on a Friday night, it is not an MVP.
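The audit trail item above (“who ran what, on which input”) can start as one record per AI run. Here is a minimal sketch. It assumes a hypothetical `AuditEntry` shape and uses an in-memory array in place of an append-only database table. It stores a reference to the input (for example a document ID) rather than the raw content, which also keeps sensitive data out of the log.

```typescript
// Hypothetical shape: one record per AI run, answering "who ran what, on which input".
type AuditEntry = {
  at: Date
  orgId: string
  userId: string
  action: string // e.g. "extract_fields"
  inputRef: string // reference to the input (such as a document ID), not its content
  promptVersion: string
  outcome: "accepted" | "edited" | "rejected" | "failed"
}

// In-memory stand-in for an append-only audit table.
const auditLog: AuditEntry[] = []

function recordAiAction(entry: Omit<AuditEntry, "at">, at: Date = new Date()): AuditEntry {
  const full: AuditEntry = { ...entry, at }
  auditLog.push(full)
  return full
}

// One plain-language line per entry, readable by support without a query tool.
function formatAuditEntry(e: AuditEntry): string {
  return `${e.at.toISOString()} ${e.userId}@${e.orgId} ran ${e.action} (prompt ${e.promptVersion}) on ${e.inputRef}: ${e.outcome}`
}

const entry = recordAiAction(
  { orgId: "org_1", userId: "user_42", action: "extract_fields", inputRef: "doc_981", promptVersion: "v3", outcome: "edited" },
  new Date("2024-05-01T10:00:00Z"),
)
console.log(formatAuditEntry(entry))
// prints: 2024-05-01T10:00:00.000Z user_42@org_1 ran extract_fields (prompt v3) on doc_981: edited
```

The formatted line is deliberately boring. Whoever is on support on a Friday night should be able to read it without opening a database client.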

A quick comparison: custom build vs boilerplate start

| Area | Custom from scratch | Boilerplate start | What you should measure |
|---|---|---|---|
| Auth and roles | 1 to 2 weeks | 0 to 2 days | Time to first invited teammate |
| Billing | 1 to 3 weeks | 1 to 3 days | Time to first paid plan created |
| Admin and support | Often skipped | Usually included | Time to resolve a user issue |
| AI feature | Competes with plumbing | Becomes the focus | Time to first user success event |
| Deployment | Ad hoc | Standardized | Lead time to deploy, rollback time |

Features grid: MVP scope you can actually ship

- Core workflow: One job to be done, end to end

- AI assist: One model backed capability, with review and correction

- Usage tracking: Events for every key step, including AI cost per run

- Safety rails: Rate limits, content filters where relevant, and fallbacks

- Support tools: Admin view and logs that answer “what happened?”

If you are tempted to add more, ask one question: will this reduce uncertainty this month?

Where AI MVPs usually go off the rails

Common failure modes we see:

- You ship “AI” but do not define success, so you cannot iterate

- You assume the prompt is the product, then discover data quality is the product

- You do not budget for latency, so the UX feels broken

Mitigations that work:

- Define a single success metric for the AI feature (and one guardrail metric)

- Add a human review step until you can prove reliability

- Log every model call with input size, output, latency, and cost

Key Stat: 76% of consumers get frustrated when organizations fail to deliver personalized interactions. Use that as pressure to improve, not an excuse to ship unreliable automation.
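Logging the cost of each model call means estimating that cost when the call happens. Here is a minimal sketch. It assumes the provider reports input and output token counts and bills per million tokens. The model names and prices below are placeholders, not real rates, so substitute your provider's published pricing.

```typescript
// Placeholder prices in USD per 1M tokens. These are NOT real provider rates.
const PRICE_PER_MILLION_TOKENS: Record<string, { input: number; output: number }> = {
  "example-small": { input: 0.5, output: 1.5 },
  "example-large": { input: 5, output: 15 },
}

type Usage = { inputTokens: number; outputTokens: number }

// Estimated cost of one model call, or undefined if the model has no price entry.
function estimateCostUsd(model: string, usage: Usage): number | undefined {
  const price = PRICE_PER_MILLION_TOKENS[model]
  if (!price) return undefined
  return (usage.inputTokens * price.input + usage.outputTokens * price.output) / 1_000_000
}

// 2,000 input tokens and 500 output tokens on "example-small":
// (2000 * 0.5 + 500 * 1.5) / 1,000,000 = 0.00175 USD
console.log(estimateCostUsd("example-small", { inputTokens: 2000, outputTokens: 500 }))
```

The function returns `undefined` for an unpriced model rather than 0. That way a missing price shows up as a visible gap in your cost reports instead of silently reading as free.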

What to cut when the scope grows anyway

Because it will

When you feel the MVP slipping, cut in this order:

- Extra roles and permissions

- Secondary integrations

- Custom dashboards (keep raw events)

- Autonomous AI actions (keep assist and review)

- Multi-tenant complexity beyond basic organizations

Keep:

- The core workflow

- Instrumentation

- Billing scaffolding

- The correction loop

A realistic timeline: from idea to investor ready MVP

Founders often ask for “a 2 week MVP.” Sometimes you can ship something in 2 weeks. But if you want something that survives real usage, plan in phases.

8 week build plan

Manual first, then guardrails

Use phases so you do not discover costs and failure modes after launch:

- Phase 0 (1 week): pick one ICP and use case, define AI success and failure, decide what data you will and will not store. Deliver: 1 page spec, metrics plan, risk list (privacy, cost, accuracy, abuse).

- Phase 1 (weeks 1 to 2): ship the core workflow without AI (manual first). Add UI for results and corrections, data model, audit trail, billing plans.

- Phase 2 (weeks 3 to 5): treat AI like a system: wrapper with retries and timeouts, logging, a 50 example eval set, review loop, cost limits per user.

- Phase 3 (weeks 6 to 8): validation: 10 to 30 interviews with the product open. Measure activation, time to value, AI success rate, 7 day repeat usage, and model cost per active user.

Context: in Project LEDA (13 weeks to an LLM driven prototype), speed came from tight feedback loops and evaluation. Without that, teams drift.

Below is a timeline we have seen work when teams use a SaaS boilerplate to remove setup work.

Phase 0: one week of decisions that save a month later

Do this before you write feature tickets.

- Choose one ICP and one use case

- Write the first onboarding flow on paper

- Define AI success and failure

- Decide what data you will store and what you will not store

- Pick your first pricing hypothesis

Deliverables:

- A one page product spec

- A metrics plan (events and dashboards)

- A risk list (privacy, cost, accuracy, abuse)

Phase 1: weeks 1 to 2, get to a usable skeleton

Using a boilerplate, you start with a product shell that already has the essentials.

Build:

- The core workflow without AI (manual first)

- The UI that will host AI results and user corrections

- The data model and audit trail

- Billing plans, even if you do not charge yet

Insight: Manual first is not a compromise. It is a calibration tool.

Phase 2: weeks 3 to 5, add the AI feature with guardrails

Now you add the AI capability, but you treat it like a system, not a prompt.

Build:

- Model call wrapper with retries, timeouts, and logging

- Evaluation harness with a small test set (even 50 examples helps)

- A review and correction loop that feeds your backlog

- Cost controls (limits per user, caching where possible)
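An evaluation harness can start very small: labeled examples, a scoring rule, and a success rate you can compare between prompt versions. Here is a minimal sketch. It assumes a hypothetical `EvalCase` shape and exact-match scoring on every expected field. It also assumes an injected `predict` function that stands in for your real model call wrapper.

```typescript
// One labeled example: an input and the fields a correct answer must contain.
type EvalCase = { id: string; input: string; expected: Record<string, string> }

type EvalReport = { total: number; passed: number; successRate: number; failedIds: string[] }

// Runs every case through `predict` and counts exact matches on all expected fields.
// `predict` is injected so the harness can run against a fake in tests.
async function runEval(
  cases: EvalCase[],
  predict: (input: string) => Promise<Record<string, string>>,
): Promise<EvalReport> {
  const failedIds: string[] = []
  for (const c of cases) {
    let ok = false
    try {
      const output = await predict(c.input)
      ok = Object.entries(c.expected).every(([key, value]) => output[key] === value)
    } catch {
      ok = false // a crash counts as a failure, not a skipped case
    }
    if (!ok) failedIds.push(c.id)
  }
  const passed = cases.length - failedIds.length
  return { total: cases.length, passed, successRate: cases.length === 0 ? 0 : passed / cases.length, failedIds }
}

// Usage with a stand-in predictor that always answers "EUR", so inv-2 fails.
const cases: EvalCase[] = [
  { id: "inv-1", input: "Invoice 17, total 120 EUR", expected: { invoiceNumber: "17", currency: "EUR" } },
  { id: "inv-2", input: "Invoice 18, total 90 USD", expected: { invoiceNumber: "18", currency: "USD" } },
]
const fakePredict = async (input: string) => ({
  invoiceNumber: input.match(/Invoice (\d+)/)?.[1] ?? "",
  currency: "EUR",
})
runEval(cases, fakePredict).then((report) => console.log(report.successRate, report.failedIds))
```

Keeping `failedIds` in the report matters as much as the rate. It is the list you read when you categorize failures instead of hiding them.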

Phase 3: weeks 6 to 8, validation and proof

This is where you earn the right to talk about traction.

Run:

- 10 to 30 user interviews with the product open

- A weekly iteration cadence (ship, measure, adjust)

- A pricing test (even if it is “book a call to upgrade”)

Measure:

- Activation rate (first meaningful action)

- AI success rate (your definition)

- Time to value

- Retention proxy (repeat usage within 7 days)

- Gross margin proxy (model cost per active user)

Example: In Project LEDA, we documented a 13 week journey from concept to prototype for an LLM driven analysis experience. The lesson was not “LLMs are fast.” The lesson was that you need a tight feedback loop and clear evaluation, or you drift.

Process steps: what to ship each week

- Week 1: onboarding, core workflow, events

- Week 2: billing, admin tools, first internal dogfood

- Week 3: AI v1 behind a feature flag, logs and costs

- Week 4: evaluation set, review UI, fallback paths

- Week 5: performance and latency work, first external users

- Weeks 6 to 8: iterate on the one workflow that drives retention
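Week 3 puts AI v1 behind a feature flag. Many boilerplates include a flag mechanism. If yours does not, the idea fits in a few lines. Here is a minimal sketch. It assumes a hypothetical in-memory flag table with three controls: a global switch, an organization allowlist for dogfooding, and a percentage rollout. Hashing the organization ID keeps each organization in the same bucket across requests. The sketch uses FNV-1a, picked only because it is short.

```typescript
type FlagConfig = {
  enabled: boolean // global kill switch
  allowOrgs: string[] // always on for these orgs (internal dogfood, design partners)
  rolloutPercent: number // 0 to 100, applied to everyone else
}

// Hypothetical flag table; in a real app this would live in the database and admin panel.
const flags: Record<string, FlagConfig> = {
  ai_extraction_v1: { enabled: true, allowOrgs: ["org_internal"], rolloutPercent: 10 },
}

// 32-bit FNV-1a hash mapped to a stable bucket in [0, 100).
function bucketOf(key: string): number {
  let h = 0x811c9dc5
  for (let i = 0; i < key.length; i++) {
    h ^= key.charCodeAt(i)
    h = Math.imul(h, 0x01000193) >>> 0
  }
  return h % 100
}

function isEnabled(flag: string, orgId: string): boolean {
  const cfg = flags[flag]
  if (!cfg || !cfg.enabled) return false
  if (cfg.allowOrgs.includes(orgId)) return true
  return bucketOf(`${flag}:${orgId}`) < cfg.rolloutPercent
}

// The manual workflow stays the default; the AI path runs only when the flag is on.
function chooseExtractionMode(orgId: string): "ai_assist" | "manual" {
  return isEnabled("ai_extraction_v1", orgId) ? "ai_assist" : "manual"
}
```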

What investors actually care about at MVP stage

Investors rarely dig into your stack choices. They do care about evidence.

Bring:

- A clear wedge: one user, one pain, one workflow

- A chart: weekly active users or usage per account

- A unit economics sketch: model cost, infra cost, gross margin hypothesis

- A pipeline view: how you get users, even if it is manual

If you do not have numbers yet, say it plainly. Then propose what you will measure next week.
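The unit economics sketch can be a few lines of arithmetic per account. Here is a minimal sketch with hypothetical inputs: plan price, AI runs per month, measured cost per run, and an allocated share of infrastructure cost. The example numbers are illustrative, not benchmarks. The useful part is seeing which input dominates the margin.

```typescript
type AccountEconomics = {
  monthlyPriceUsd: number // what the account pays per month
  runsPerMonth: number // AI runs the account triggers per month
  costPerRunUsd: number // from your model call logs
  infraPerAccountUsd: number // hosting, storage, email, etc., allocated per account
}

// Gross margin = (revenue - cost to serve) / revenue.
// Returns null for free or comped accounts, where revenue is zero and the ratio is undefined.
function grossMargin(a: AccountEconomics): number | null {
  if (a.monthlyPriceUsd <= 0) return null
  const costToServe = a.runsPerMonth * a.costPerRunUsd + a.infraPerAccountUsd
  return (a.monthlyPriceUsd - costToServe) / a.monthlyPriceUsd
}

// Illustrative account: $49/month, 400 runs at $0.02, $3 of infrastructure.
// Cost to serve = 400 * 0.02 + 3 = $11, so margin = (49 - 11) / 49, about 0.776.
const margin = grossMargin({ monthlyPriceUsd: 49, runsPerMonth: 400, costPerRunUsd: 0.02, infraPerAccountUsd: 3 })
console.log(margin === null ? "no revenue" : margin.toFixed(3))
```

In this example, doubling runs per month drops the margin to about 0.61, because (49 - 19) / 49 ≈ 0.61. That is why cost per run belongs in the same logs as usage.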

Hypotheses worth testing fast:

- Users will pay for speed, not for “AI”

- Accuracy threshold for trust is higher than you think

- The correction loop will be your moat if you capture it

Delivery timelines from real builds

Use these as reference points, not promises

Miraflora Wagyu delivery

Custom Shopify experience shipped fast

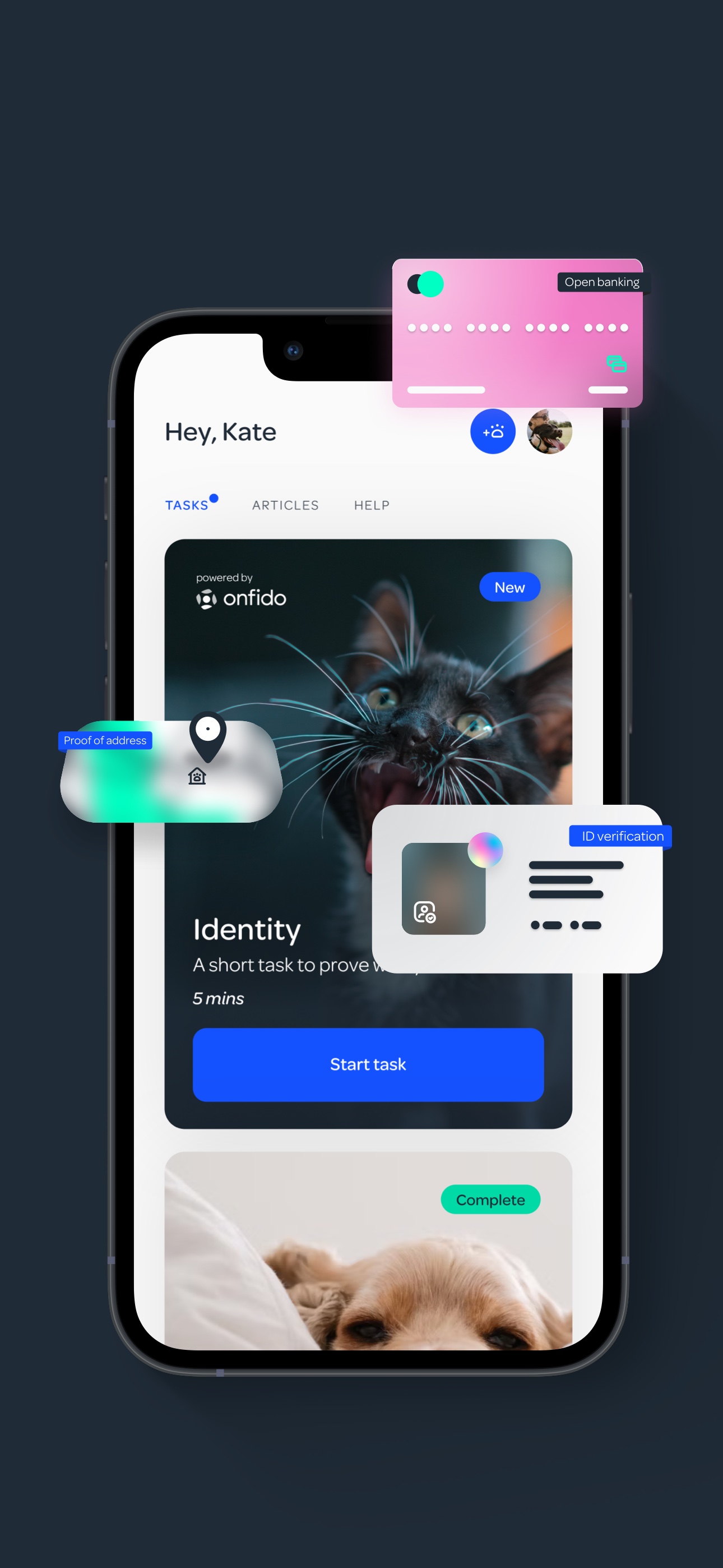

PetProov delivery

Secure mobile platform with verification flows

Project LEDA prototype journey

LLM driven analysis concept to prototype

Best practices for an AI powered SaaS MVP that does not collapse

Boilerplates help with speed. They do not protect you from AI specific risks. Here are practices we use to keep MVPs shippable.

Boilerplate vs scratch

What to measure weekly

A boilerplate only matters if it changes your lead time. Track the deltas:

- Auth and roles: measure time to first invited teammate (scratch: 1 to 2 weeks; boilerplate: 0 to 2 days)

- Billing: measure time to first paid plan created (scratch: 1 to 3 weeks; boilerplate: 1 to 3 days)

- Admin and support: measure time to resolve a user issue (often skipped in custom builds)

- AI feature: measure time to first user success event (plumbing competes with this when you start from scratch)

- Deployment: measure lead time to deploy and rollback time

If these numbers do not improve, the boilerplate is not your bottleneck. Your scope is.

1) Treat AI like a dependency with SLOs

Define targets early. Even rough ones.

- Latency target (p95)

- Error rate target

- Cost per run target

- Quality target (your metric)

Insight: If you cannot afford to run the model at MVP scale, you cannot afford to sell the product.
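Rough targets only help if something checks them. Here is a minimal sketch. It assumes you already keep one record per model run with latency, success, and cost; the field names are hypothetical. The targets are placeholders you set yourself. The p95 uses the nearest-rank method. The quality target is left out because it depends on your own metric, such as the pass rate on an evaluation set.

```typescript
type RunRecord = { latencyMs: number; ok: boolean; costUsd: number }
type SloTargets = { p95LatencyMs: number; maxErrorRate: number; maxCostPerRunUsd: number }

// Nearest-rank percentile: the smallest value with at least p% of samples at or below it.
function percentile(values: number[], p: number): number {
  if (values.length === 0) return 0
  const sorted = [...values].sort((a, b) => a - b)
  const rank = Math.max(1, Math.ceil((p * sorted.length) / 100))
  return sorted[rank - 1] ?? 0
}

// Compares a window of runs (for example, the last 24 hours) against the targets.
function checkSlos(runs: RunRecord[], t: SloTargets) {
  const n = runs.length
  const p95 = percentile(runs.map((r) => r.latencyMs), 95)
  const errorRate = n === 0 ? 0 : runs.filter((r) => !r.ok).length / n
  const costPerRun = n === 0 ? 0 : runs.reduce((sum, r) => sum + r.costUsd, 0) / n
  const breaches: string[] = []
  if (p95 > t.p95LatencyMs) breaches.push("latency")
  if (errorRate > t.maxErrorRate) breaches.push("errors")
  if (costPerRun > t.maxCostPerRunUsd) breaches.push("cost")
  return { p95, errorRate, costPerRun, breaches }
}
```

Post the `breaches` list wherever the team already looks. At MVP stage, a breach is a prompt to investigate, not a trigger for automatic rollback.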

2) Build a correction loop from day one

Users will forgive wrong outputs if they can fix them quickly.

Include:

- Inline edit

- “This is wrong” feedback with a reason

- A way to attach ground truth (file, link, note)

Track:

- Correction rate

- Time to correct

- Top failure categories
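Correction rate, time to correct, and top failure categories can all come from one feedback record per AI output. Here is a minimal sketch. It assumes a hypothetical `Feedback` shape in which `reason` is a short category the user picks when marking an output as wrong. The median here is the lower middle value when the count is even.

```typescript
type Feedback = {
  outputId: string
  shownAt: number // ms timestamp when the AI output was shown
  correctedAt?: number // set if the user edited or rejected the output
  reason?: string // e.g. "wrong date", "missing field"
}

function correctionStats(items: Feedback[], topN = 3) {
  const corrected = items.filter((f) => f.correctedAt !== undefined)
  const times = corrected.map((f) => (f.correctedAt as number) - f.shownAt).sort((a, b) => a - b)

  // Count corrections per reason to find the top failure categories.
  const counts = new Map<string, number>()
  for (const f of corrected) {
    const reason = f.reason ?? "unspecified"
    counts.set(reason, (counts.get(reason) ?? 0) + 1)
  }

  return {
    correctionRate: items.length === 0 ? 0 : corrected.length / items.length,
    medianTimeToCorrectMs: times.length === 0 ? 0 : (times[Math.floor((times.length - 1) / 2)] ?? 0),
    topReasons: Array.from(counts.entries())
      .sort((a, b) => b[1] - a[1])
      .slice(0, topN),
  }
}
```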

3) Log everything you will need for debugging and fundraising

At minimum:

- Prompt version and model version

- Input size and output size

- Latency and cost

- User action taken after the result
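The minimum fields above fit in one structured log line per model call, plus a second line when the user acts on the result. Here is a minimal sketch. It assumes JSON lines written to stdout and collected by whatever log pipeline you already run. The field names and the shared `runId` join key are hypothetical. Sizes are character counts, a cheap proxy for token counts.

```typescript
type ModelCallLog = {
  event: "model_call"
  runId: string
  ts: string
  model: string
  promptVersion: string
  inputChars: number
  outputChars: number
  latencyMs: number
  costUsd: number | null // null when the cost could not be estimated
}

type UserActionLog = {
  event: "user_action"
  runId: string // joins back to the model_call record
  ts: string
  action: "accepted" | "edited" | "rejected"
}

// Writes one JSON object per line and returns it so callers (and tests) can inspect it.
function emit(record: ModelCallLog | UserActionLog): string {
  const line = JSON.stringify(record)
  console.log(line)
  return line
}

emit({
  event: "model_call",
  runId: "run_1",
  ts: new Date().toISOString(),
  model: "example-model",
  promptVersion: "v3",
  inputChars: 4210,
  outputChars: 380,
  latencyMs: 2140,
  costUsd: 0.0031,
})
emit({ event: "user_action", runId: "run_1", ts: new Date().toISOString(), action: "edited" })
```

Logging the user action as a separate event avoids holding the model log open until the user decides. Joining on `runId` later gives you the “what happened?” view for any run.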

If you work in regulated industries, you may need stricter controls. In our Generative AI Solutions work, the constraint is usually not model choice. It is governance: what you store, how you audit, and how you explain outcomes.

4) Keep the architecture boring until it hurts

Early stage teams reach for microservices too soon. Do not.

Start with:

- A modular monolith

- Clear boundaries in code

- Background jobs for model calls if needed

Move later when:

- Deploys slow you down

- One part of the system needs separate scaling

Benefits: what you get if you follow the boring path

- Faster iteration because the system is easier to reason about

- Lower ops load with a small team

- Cleaner investor story because metrics are consistent

A small, practical code pattern: model call wrapper

```typescript
type AiCallResult<T> = {
  ok: boolean
  data?: T
  error?: string
  latencyMs: number
  costUsd?: number
  model: string
  promptVersion: string
}

async function callModel<T>(params: {
  model: string
  promptVersion: string
  input: unknown
  timeoutMs: number
}): Promise<AiCallResult<T>> {
  const start = Date.now()
  try {
    // 1) serialize input
    // 2) call provider with timeout (params.timeoutMs)
    // 3) parse output into T
    // 4) compute cost estimate
    return {
      ok: true,
      data: {} as T, // placeholder until steps 1 to 4 are implemented
      latencyMs: Date.now() - start,
      costUsd: undefined,
      model: params.model,
      promptVersion: params.promptVersion,
    }
  } catch (e: unknown) {
    // Failures return the same shape as successes, so both can be logged the same way.
    return {
      ok: false,
      error: e instanceof Error ? e.message : "unknown error",
      latencyMs: Date.now() - start,
      model: params.model,
      promptVersion: params.promptVersion,
    }
  }
}
```

This is not fancy. That is the point. It gives you consistent logs, consistent failures, and a place to add safeguards later.
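The wrapper leaves the provider call, with its timeout and retries, as a placeholder. Here is a minimal sketch of both safeguards as generic helpers. The defaults (three attempts, 500 ms base delay) are placeholder values. Note that `withTimeout` stops waiting but does not cancel the underlying request; if your provider SDK accepts an abort signal, pass one as well. Only retry errors that are plausibly transient, such as timeouts and rate limits. The `isRetryable` predicate is where you make that call.

```typescript
const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms))

// Rejects if `promise` has not settled within `ms`. Does not cancel the underlying work.
function withTimeout<T>(promise: Promise<T>, ms: number): Promise<T> {
  return new Promise<T>((resolve, reject) => {
    const timer = setTimeout(() => reject(new Error(`timed out after ${ms} ms`)), ms)
    promise.then(
      (value) => {
        clearTimeout(timer)
        resolve(value)
      },
      (err) => {
        clearTimeout(timer)
        reject(err)
      },
    )
  })
}

// Calls `fn` up to `attempts` times, doubling the delay after each retryable failure.
async function withRetries<T>(
  fn: () => Promise<T>,
  opts: { attempts?: number; baseDelayMs?: number; isRetryable?: (e: unknown) => boolean } = {},
): Promise<T> {
  const attempts = Math.max(1, opts.attempts ?? 3)
  const baseDelayMs = opts.baseDelayMs ?? 500
  const isRetryable = opts.isRetryable ?? (() => true)
  let lastError: unknown
  for (let i = 0; i < attempts; i++) {
    try {
      return await fn()
    } catch (e) {
      lastError = e
      if (i === attempts - 1 || !isRetryable(e)) break
      await sleep(baseDelayMs * 2 ** i)
    }
  }
  throw lastError
}

// Hypothetical usage inside step 2 of callModel, with `provider.complete` standing in for your SDK:
// const raw = await withRetries(() => withTimeout(provider.complete(input), params.timeoutMs))
```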

Security and trust: do not bolt it on

If your product touches identity, payments, or regulated data, you need to plan for trust early.

A useful reference point is the kind of work we did with PetProov, where identity verification and secure flows were core to the product. Even when the timeline is longer (their platform was built in 6 months), the principle holds for MVPs: design the trust boundary first.

Practical MVP steps:

- Store the minimum sensitive data

- Encrypt secrets properly and rotate keys

- Add rate limits to AI endpoints

- Create an audit log that is readable by humans
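Rate limits on AI endpoints guard against both runaway cost and abuse. Here is a minimal sketch of a per-user token bucket held in process memory. That storage choice only holds for a single server instance; with several instances, you would keep the counters in a shared store instead. Capacity and refill rate are placeholder values.

```typescript
type Bucket = { tokens: number; updatedAt: number }

class TokenBucketLimiter {
  private readonly buckets = new Map<string, Bucket>()
  private readonly capacity: number // max burst of requests
  private readonly refillPerSecond: number // sustained requests per second

  constructor(capacity: number, refillPerSecond: number) {
    this.capacity = capacity
    this.refillPerSecond = refillPerSecond
  }

  // Returns true and consumes a token if the user may make a request at time `now` (ms).
  tryConsume(userId: string, now: number = Date.now()): boolean {
    const b = this.buckets.get(userId) ?? { tokens: this.capacity, updatedAt: now }
    const elapsedSec = Math.max(0, now - b.updatedAt) / 1000
    b.tokens = Math.min(this.capacity, b.tokens + elapsedSec * this.refillPerSecond)
    b.updatedAt = now
    const allowed = b.tokens >= 1
    if (allowed) b.tokens -= 1
    this.buckets.set(userId, b)
    return allowed
  }
}

// Burst of 5, then one request every 2 seconds per user.
const aiLimiter = new TokenBucketLimiter(5, 0.5)
// In the AI endpoint handler: if (!aiLimiter.tryConsume(userId)) respond with HTTP 429.
```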

If you are building mobile, crypto and key handling can get weird fast. We have shared lessons from implementing custom cryptographic systems in React Native, and the main takeaway is simple: test strategy is part of the security design, not an afterthought.

Validation metrics to track in the first 30 days

Pick a small set and review weekly.

- Activation rate: percent of signups reaching the first meaningful action

- Time to value: minutes from signup to first success event

- Repeat usage: percent of users coming back within 7 days

- AI correction rate: percent of outputs edited by users

- Model cost per active user: weekly model spend divided by weekly active users

If you do not have baseline numbers yet, treat them as hypotheses and set targets for the next sprint.
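All five metrics can be computed from a flat list of product events before you build any dashboard. Here is a minimal sketch, with three assumptions. The event names (`signed_up`, `first_success`, `ai_output_shown`, `ai_output_edited`, `model_call`) are hypothetical. `first_success` marks the first meaningful action. “Coming back” means any event more than one day and at most seven days after signup. The signup-based metrics assume the list covers each user's first seven days. `modelCostPerActiveUser` divides total spend by the distinct users in whatever events you pass, so it matches the weekly definition above when you pass one week of events.

```typescript
type EventName = "signed_up" | "first_success" | "ai_output_shown" | "ai_output_edited" | "model_call"
type ProductEvent = { userId: string; name: EventName; at: number; costUsd?: number }

const MINUTE_MS = 60 * 1000
const DAY_MS = 24 * 60 * MINUTE_MS

// Earliest timestamp of a given event per user.
function firstOccurrence(events: ProductEvent[], name: EventName): Map<string, number> {
  const first = new Map<string, number>()
  for (const e of events) {
    if (e.name !== name) continue
    const prev = first.get(e.userId)
    if (prev === undefined || e.at < prev) first.set(e.userId, e.at)
  }
  return first
}

const ratio = (a: number, b: number) => (b === 0 ? 0 : a / b)

function validationMetrics(events: ProductEvent[]) {
  const signups = firstOccurrence(events, "signed_up")
  const successes = firstOccurrence(events, "first_success")
  const minutesToValue: number[] = []
  let returned = 0
  signups.forEach((signupAt, userId) => {
    const successAt = successes.get(userId)
    if (successAt !== undefined) minutesToValue.push((successAt - signupAt) / MINUTE_MS)
    // "Came back": any event more than 1 day and at most 7 days after signup.
    const cameBack = events.some(
      (e) => e.userId === userId && e.at - signupAt > DAY_MS && e.at - signupAt <= 7 * DAY_MS,
    )
    if (cameBack) returned++
  })
  minutesToValue.sort((a, b) => a - b)
  const count = (name: EventName) => events.filter((e) => e.name === name).length
  const modelSpend = events.reduce((sum, e) => sum + (e.name === "model_call" ? (e.costUsd ?? 0) : 0), 0)
  const activeUsers = new Set(events.map((e) => e.userId)).size
  return {
    activationRate: ratio(minutesToValue.length, signups.size),
    medianMinutesToValue: minutesToValue.length === 0 ? null : minutesToValue[Math.floor((minutesToValue.length - 1) / 2)],
    repeatUsageRate: ratio(returned, signups.size),
    aiCorrectionRate: ratio(count("ai_output_edited"), count("ai_output_shown")),
    modelCostPerActiveUser: ratio(modelSpend, activeUsers),
  }
}
```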

Real examples: what fast delivery looks like in practice

Speed is not about heroics. It is about constraints, clear decisions, and tight feedback.

MVP scope that ships

One month runway mindset

Aim: fewer unknowns, not fewer features. Include only what supports real users:

- Auth + password reset

- Simple org + roles (avoid enterprise permission grids)

- Billing + plans (even if you comp early)

- Audit trail for AI actions (who ran what, on which input)

- Admin support tools (impersonation, feature flags, usage view)

Defer what explodes scope: multiple integrations, custom analytics dashboards (start with events + spreadsheet), and agents that act without user review. Test: if one person cannot support it on a Friday night, it is not an MVP.

Miraflora Wagyu: premium ecommerce in 4 weeks

Miraflora Wagyu needed a high end Shopify experience and needed it fast. The team was spread across time zones, which made synchronous feedback hard. The way through was simple: keep the decision surface small and make asynchronous work the default.

What worked:

- Clear weekly milestones

- A narrow definition of “done” for each page and flow

- Fast review cycles in Slack, not meetings

Example: Miraflora Wagyu shipped a custom Shopify store in 4 weeks. The schedule forced ruthless prioritization and reduced “nice to have” debates.

blkbx: one click checkout as the wedge

blkbx came with a focused premise: let sellers generate payments for virtually any product through a seller account linked to Stripe, and let buyers purchase via social posts, ads, and QR codes.

That is a good MVP wedge. It is narrow, but it is valuable.

If you are building an AI powered SaaS, look for a wedge like this:

- One action users want to complete faster

- One place where errors are expensive

- One workflow where AI can assist but not fully automate yet

PetProov: trust features are product features

PetProov’s challenge was not just UX. It was trust: identity verification for breeders, buyers, and adopters, plus dashboards for multiple concurrent transactions.

The lesson for AI MVPs: when trust is central, it will dominate scope.

Insight: If users do not trust the output, they will not adopt the workflow. Accuracy is a product requirement, not a model metric.

Table: which example maps to your MVP risk

| Example | Primary risk | What reduced risk | What to copy |

|---|---|---|---|

| Miraflora Wagyu | Time and coordination | Tight milestones, async feedback | Weekly delivery slices |

| blkbx | Payments and conversion | Stripe linked flows, simple checkout | One wedge feature |

| PetProov | Trust and verification | Secure onboarding, clear dashboards | Auditability and UX clarity |

FAQ: questions founders ask before shipping

Should we build AI first or the workflow first? Workflow first. Then AI. Otherwise you cannot measure impact.

Do we need multiple models? Not for MVP. Start with one. Add a second only when you can prove a cost or quality benefit.

How do we talk about AI to investors without overselling? Show the workflow, then show the metric change. “Users complete X in Y minutes instead of Z.”

What if the model is wrong too often? Narrow the task, add review, and build an evaluation set. Do not hide the failures. Categorize them.

How this ties back to fundraising and investor relations

A boilerplate does not raise money. Evidence does.

Use your MVP to produce a simple investor update every two weeks:

- What you shipped

- What you measured

- What improved and what got worse

- What you will change next

If you do this consistently, investor conversations get easier. You are not pitching a story. You are reporting progress.

Conclusion

Building an AI powered SaaS MVP fast is mostly about removing avoidable work and shrinking uncertainty.

A SaaS boilerplate helps because it gives you the boring parts up front: auth, billing, roles, admin, deployment patterns. That buys you time to focus on the part that matters: the workflow and the AI feature.

Do not confuse speed with skipping rigor. AI systems need logging, evaluation, and fallbacks from day one.

Insight: Your MVP is not a smaller product. It is a smaller set of questions.

Actionable next steps you can take this week:

- Pick one workflow and write the success metric in one sentence

- Ship the workflow without AI, then add AI behind a feature flag

- Instrument every step, including model cost and latency

- Run 10 user sessions and track time to value

- Write a two week investor update with real numbers, even if they are small

If you do that, you will move faster and you will know why you are moving faster. That is what compounds.