Introduction

Multi tenant SaaS is already hard. Add AI workloads and it gets weird fast.

You now have two kinds of load:

- Product load: APIs, dashboards, background jobs, webhooks

- AI load: embeddings, vector search, batch labeling, agent runs, model fine tuning, and long running inference

The failure mode is predictable. One tenant sends a big PDF batch, or an agent gets stuck in a tool loop, and suddenly everyone else feels it. Latency climbs. Costs spike. Support tickets follow.

This article is about architecting multi tenant SaaS with AI workloads using Apptension’s SaaS Boilerplate as a starting point. Not as a magic box. As a practical baseline you can extend.

What we’ll focus on:

- Isolation: how to keep tenants from stepping on each other

- Scaling: what to autoscale, what not to autoscale, and why

- Cost control: how to stop AI spend from becoming a surprise

Insight: In AI heavy SaaS, “multi tenant” is not one decision. It’s a set of decisions you make per subsystem: auth, data, queues, caches, and model calls.

What we mean by Apptension’s SaaS Boilerplate

Think of the boilerplate as the foundation you do not want to rebuild every time:

- authentication and tenant aware access patterns

- baseline roles and permissions

- billing friendly primitives (plans, quotas, usage)

- a structure for background jobs and integrations

It does not decide your isolation model. It gives you a clean place to implement it.

Isolation decision starter

Use this in your first architecture review

Answer these as a team:

- What data must never cross tenants (including logs and embeddings)?

- What is the blast radius if one tenant floods the system?

- Which tenants require separate storage or compute for compliance?

- What is your rollback plan if a tenant isolation bug ships?

Write the answers down. Revisit them every quarter.

The hard parts: multi tenant plus AI makes new failure modes

Most teams underestimate how many ways AI can break multi tenant assumptions.

Common pain points we see:

- Noisy neighbor effects: one tenant’s AI jobs saturate CPU, queue workers, or vendor rate limits

- Data leakage risk: prompts and retrieval can accidentally cross tenant boundaries if you reuse indexes or caches

- Unbounded work: agents can keep calling tools, or chunking can explode token counts

- Cost opacity: you can’t control what you can’t measure, and AI spend is easy to hide inside “one API call”

- Latency cliffs: vector search and model calls have different scaling curves than your normal API

Insight: AI workloads are spiky and non linear. Your infra can look fine at 50 tenants and fall apart at 55.

What changes when you add retrieval and agents

Retrieval augmented generation and agent workflows add state.

You now store:

- embeddings

- chunk metadata

- conversation context

- tool outputs

And you run workflows that can take seconds or minutes. That pushes you toward asynchronous patterns, stronger idempotency, and better per tenant quotas.

The “it worked in staging” trap

Staging rarely has:

- realistic document sizes

- tenants with different usage patterns

- concurrent background jobs

So you ship, and the first enterprise tenant uploads a few thousand files.

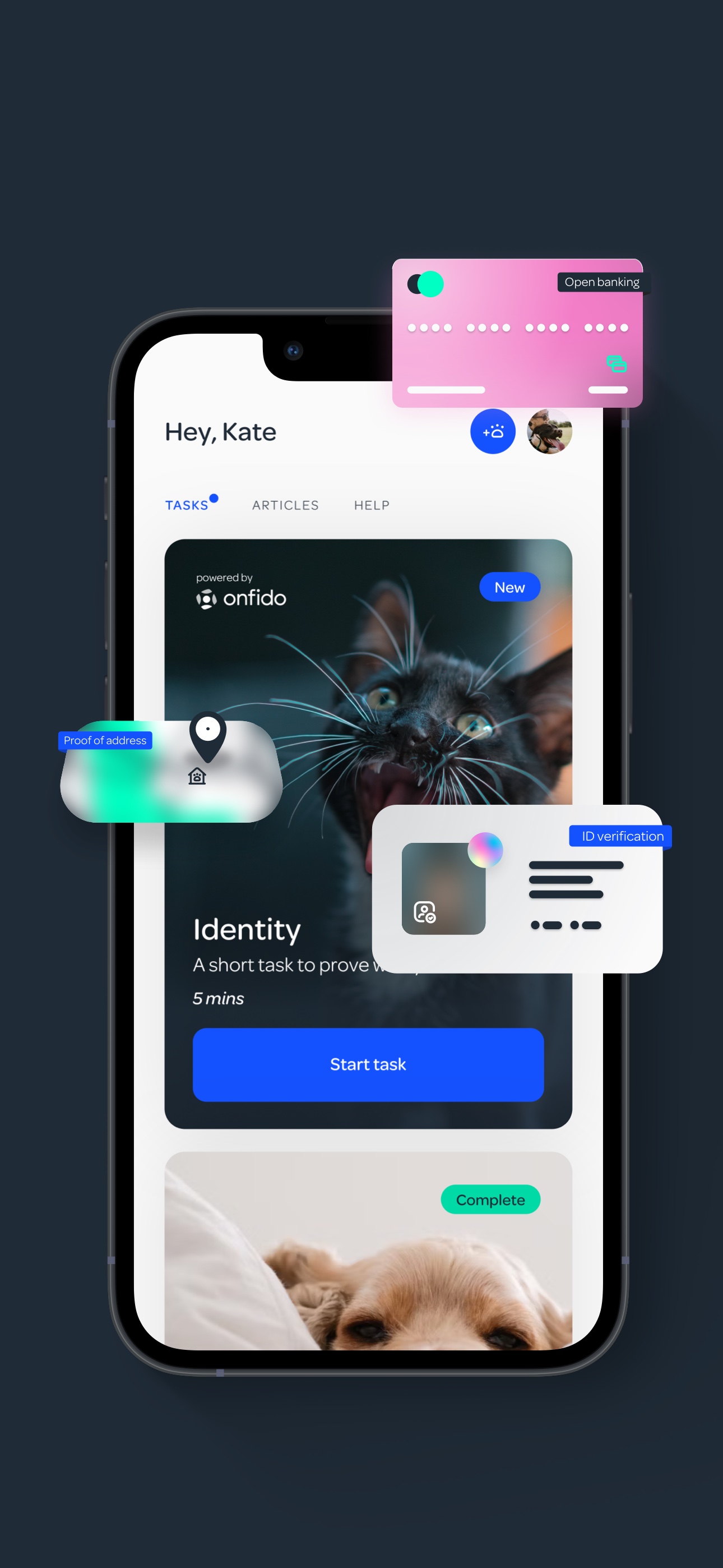

Example: In projects like PetProov, onboarding flows and verification steps are where trust is won or lost. The same applies to AI onboarding. If the first import or analysis job fails, users assume the product is unreliable.

A quick checklist of risks to model early

Use this list during architecture reviews:

- Can one tenant exhaust shared queues?

- Can one tenant hit vendor rate limits and block others?

- Can cached responses leak across tenants?

- Can a single request trigger unbounded model calls?

- Can you attribute every AI cost to a tenant and a feature?

What we anchor on in delivery

Concrete timelines and measurable constraints from real projects:

- 4 weeks to launch Miraflora Wagyu: custom Shopify store delivery

- 6 months to build PetProov: secure onboarding and transaction flows

- 76% of consumers frustrated by poor personalization: a reminder that AI UX needs reliability

Isolation models that work in practice (and what they cost)

Isolation is not binary. It’s a slider.

You can isolate by:

- data

- compute

- network

- vendor accounts

- rate limits and quotas

Here’s a comparison table you can use to pick a baseline.

| Isolation choice | What you isolate | Pros | Cons | When it fits |
|---|---|---|---|---|
| Shared DB, tenant column | relational data | cheap, simple ops | higher blast radius, harder compliance | early stage, low compliance needs |
| Shared DB, separate schema | relational data | better separation, easier migrations per tenant | more complexity in tooling | mid stage SaaS with growing enterprise asks |
| Separate DB per tenant | relational data | strong isolation, easier data residency | higher ops cost, more connections | regulated or large enterprise tenants |
| Shared vector index with tenant filter | embeddings | cheapest, simplest | easy to get wrong, leakage risk | prototypes, internal tools |
| Separate vector index per tenant | embeddings | clear boundaries, simpler deletes | cost grows with tenants | B2B SaaS with meaningful AI usage |
| Separate worker pools per plan | compute | noisy neighbor control | more infra to manage | when AI jobs dominate |

Insight: If you can’t afford separate everything, isolate the parts that can leak data first: vector search, caches, and logs.

Tenant isolation in the data layer

A few patterns we’ve used successfully:

- Tenant scoped repositories: every query includes tenant id, enforced in one place

- Row level security: strong guardrails, but adds complexity to debugging

- Separate schemas for enterprise: a pragmatic hybrid

What fails:

- relying on developers to remember `WHERE tenant_id = ...`

- mixing admin tooling that bypasses tenant filters
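To make the "enforced in one place" idea concrete, here is a minimal sketch of a tenant scoped repository. It uses Python's built-in sqlite3 so it runs anywhere. The `documents` table, the class name, and its methods are assumptions for illustration, not part of the boilerplate.

```python
import sqlite3


class TenantScopedRepository:
    """Binds a connection to one tenant so callers cannot forget the filter."""

    def __init__(self, conn: sqlite3.Connection, tenant_id: str):
        if not tenant_id:
            raise ValueError("tenant_id is required")
        self._conn = conn
        self._tenant_id = tenant_id

    def add_document(self, title: str) -> int:
        # The tenant id comes from the repository, never from the caller.
        cur = self._conn.execute(
            "INSERT INTO documents (tenant_id, title) VALUES (?, ?)",
            (self._tenant_id, title),
        )
        return cur.lastrowid

    def list_documents(self) -> list:
        # The only place where the tenant filter is written.
        rows = self._conn.execute(
            "SELECT id, title FROM documents WHERE tenant_id = ? ORDER BY id",
            (self._tenant_id,),
        )
        return rows.fetchall()


# Hypothetical schema, kept in memory for the demo.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE documents ("
    "id INTEGER PRIMARY KEY, tenant_id TEXT NOT NULL, title TEXT NOT NULL)"
)

repo_a = TenantScopedRepository(conn, "t_123")
repo_b = TenantScopedRepository(conn, "t_999")
repo_a.add_document("contract.pdf")
repo_b.add_document("invoice.pdf")
```

Each repository only ever sees its own rows. Admin tooling that needs cross tenant access should go through a separate, explicitly named path so it is easy to audit.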

Isolation for AI specific storage

AI adds new stores:

- vector database

- object storage for files

- prompt and run logs

Make tenant boundaries explicit:

- store embeddings with a tenant namespace, not just metadata

- store files under tenant prefixes in object storage

- encrypt sensitive fields if you operate in regulated spaces
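A small sketch of what explicit tenant boundaries can look like in code. The id format (`t_` plus alphanumerics), the key layout, and the function names are all assumptions. The point is that keys and namespaces are built by one helper, and ownership is checked before any read.

```python
import re

TENANT_ID = re.compile(r"t_[A-Za-z0-9]+")  # assumed id format, as in "t_123"


def _valid(tenant_id: str) -> str:
    # Reject anything that could smuggle a path separator into a key.
    if not TENANT_ID.fullmatch(tenant_id):
        raise ValueError(f"invalid tenant id: {tenant_id!r}")
    return tenant_id


def object_key(tenant_id: str, file_id: str) -> str:
    """Object storage key under the tenant's own prefix."""
    if "/" in file_id or file_id in ("", ".", ".."):
        raise ValueError(f"invalid file id: {file_id!r}")
    return f"{_valid(tenant_id)}/files/{file_id}"


def vector_namespace(tenant_id: str) -> str:
    """Namespace (or index name) used for this tenant's embeddings."""
    return f"tenant:{_valid(tenant_id)}"


def assert_owned(tenant_id: str, key: str) -> None:
    """Raise if a storage key does not live under the tenant's prefix."""
    # The trailing slash stops "t_12" from matching "t_123/...".
    if not key.startswith(f"{_valid(tenant_id)}/"):
        raise PermissionError(f"{key!r} is outside tenant {tenant_id!r}")
```

Calling `assert_owned` in the worker before fetching a file is cheap, and it turns a silent cross tenant read into a loud error.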

Insight: “We filter by tenant id” is not isolation. It’s a promise. Isolation is when the system makes it hard to break the promise.

A pragmatic default we often start with

If you need a sane starting point:

- shared relational DB with strict tenant scoping and tests

- separate vector index per tenant (or per enterprise tenant)

- shared workers with per tenant rate limiting, then split pools later

Then you measure, and move the slider for the tenants who need it.

A simple cost control experiment

If you do not have baseline numbers yet

Run this for one week:

- Instrument token usage and job runtime for one AI feature.

- Add a per tenant daily budget that is high enough to not block normal use.

- Track:

  - spend per tenant

  - p95 job runtime

  - queue wait time

  - support tickets related to slowness

If you can’t produce a per tenant cost report after a week, fix attribution before shipping more AI features.
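A rough sketch of the per tenant report this experiment should produce. The job records are made up, and the tuple layout is an assumption. In practice these rows would come from your job table or usage events.

```python
from collections import defaultdict

# Hypothetical job records:
# (tenant_id, runtime_seconds, queue_wait_seconds, cost_cents)
JOBS = [
    ("t_123", 12, 1, 40),
    ("t_123", 95, 3, 310),
    ("t_123", 20, 2, 55),
    ("t_456", 8, 30, 25),
    ("t_456", 9, 45, 30),
]


def p95(values):
    """Nearest-rank 95th percentile, using integer math to avoid float rounding."""
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n)
    return ordered[rank - 1]


def weekly_report(jobs):
    """Group job records by tenant and summarize spend, runtime, and queue wait."""
    by_tenant = defaultdict(list)
    for tenant_id, runtime, wait, cost in jobs:
        by_tenant[tenant_id].append((runtime, wait, cost))

    report = {}
    for tenant_id, rows in by_tenant.items():
        report[tenant_id] = {
            "spend_cents": sum(cost for _, _, cost in rows),
            "p95_runtime_s": p95([runtime for runtime, _, _ in rows]),
            "p95_queue_wait_s": p95([wait for _, wait, _ in rows]),
        }
    return report


REPORT = weekly_report(JOBS)
```

With only a handful of jobs the p95 is just the slowest job. The number becomes useful once a tenant has run a few hundred jobs.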

Scaling patterns for AI workloads without melting your core SaaS

Scaling AI workloads is less about Kubernetes tricks and more about workflow design.

Scale workers, not web

Keep core SaaS fast

AI workloads belong behind a job system. Keep sync paths (auth, CRUD, dashboards) separate from async paths (ingestion, chunking, embeddings, agent runs). If your web tier scales because embeddings are slow, you are scaling the wrong layer.

Practical blueprint:

- Put AI behind jobs with `tenant_id`, feature name, and a cost estimate.

- Add rate limits per tenant and per plan. Hard caps beat surprise invoices.

- Split worker pools by workload type (ingestion vs inference vs scheduled batch).

What to measure (hypothesis): cost per job, retries per job type, and p95 time to result per tenant. Use it to tune quotas and worker counts.

You want to keep your core product fast while AI runs in the background.

Step by step: a scaling approach that does not punish everyone

- Separate synchronous from asynchronous paths

  - sync: auth, CRUD, dashboards

  - async: ingestion, chunking, embeddings, agent runs

- Put AI behind a job system

  - every job has tenant id, feature name, and cost estimate

- Add rate limits per tenant and per plan

  - hard caps beat surprise invoices

- Split worker pools by workload type

  - ingestion workers

  - inference workers

  - scheduled batch workers

- Autoscale the right layer

  - scale workers, not your whole web tier

Insight: If your web API scales because embeddings are slow, you’re scaling the wrong thing.

AI workload separation blueprint

- Step 1: Tag every request and job with tenant id and feature

- Step 2: Push long work to queues, return a job id

- Step 3: Store intermediate artifacts (chunks, embeddings) with tenant namespaces

- Step 4: Enforce per tenant concurrency limits at the worker level

- Step 5: Expose progress and failure states in the UI
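Step 4 is the one teams tend to skip, so here is a minimal sketch of a per tenant concurrency cap. It is in-process only. With several worker processes you would keep the counters in a shared store, which is outside this sketch. The class and method names are assumptions.

```python
import threading
from typing import Dict, Optional


class TenantConcurrencyLimiter:
    """Caps how many jobs one tenant can run at the same time."""

    def __init__(self, default_limit: int, overrides: Optional[Dict[str, int]] = None):
        self._default = default_limit
        self._overrides = overrides or {}  # e.g. higher caps for enterprise tenants
        self._running: Dict[str, int] = {}
        self._lock = threading.Lock()

    def try_acquire(self, tenant_id: str) -> bool:
        """Return True and take a slot, or False if the tenant is at its cap."""
        limit = self._overrides.get(tenant_id, self._default)
        with self._lock:
            current = self._running.get(tenant_id, 0)
            if current >= limit:
                return False  # caller should requeue the job, not drop it
            self._running[tenant_id] = current + 1
            return True

    def release(self, tenant_id: str) -> None:
        """Give the slot back when the job finishes or fails."""
        with self._lock:
            current = self._running.get(tenant_id, 0)
            if current <= 1:
                self._running.pop(tenant_id, None)
            else:
                self._running[tenant_id] = current - 1
```

A worker would call `try_acquire` before starting a job and `release` in a `finally` block. A job that cannot get a slot goes back on the queue, so one busy tenant waits on itself instead of on everyone else.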

The vendor limit problem

Even if your infra scales, your model provider may not.

Mitigations:

- per tenant token budgets and request rate limits

- backoff and retry with jitter

- queue prioritization (interactive requests beat batch)

- optional: separate vendor accounts for high value tenants
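Backoff with jitter is easy to describe and easy to get subtly wrong. A minimal sketch, assuming a hypothetical `RateLimited` exception that your vendor client raises when throttled. The base delay and cap are illustrative values, not recommendations.

```python
import random
import time


class RateLimited(Exception):
    """Hypothetical error a vendor client raises when the provider throttles us."""


def call_with_retry(fn, max_attempts=5, base=0.5, cap=30.0,
                    sleep=time.sleep, rng=random.random):
    """Retry fn on RateLimited with exponential backoff and full jitter."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the job system
            # Full jitter: a random delay in [0, min(cap, base * 2**attempt)).
            sleep(rng() * min(cap, base * 2 ** attempt))


# Demo with a fake vendor call that is throttled twice, then succeeds.
# sleep and rng are injected so the demo runs instantly and deterministically;
# rng is pinned to 1.0 here to show the upper bound of each delay.
calls = {"count": 0}


def fake_vendor_call():
    calls["count"] += 1
    if calls["count"] <= 2:
        raise RateLimited("429")
    return "ok"


delays = []
result = call_with_retry(fake_vendor_call, sleep=delays.append, rng=lambda: 1.0)
```

The jitter matters in multi tenant systems because many workers tend to hit the same vendor limit at the same moment. Without it they all retry together and hit the limit again.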

A minimal job payload that supports multi tenant controls

```json
{
  "jobId": "uuid",
  "tenantId": "t_123",
  "userId": "u_456",
  "feature": "document_ingestion",
  "priority": "batch",
  "inputs": {
    "fileKey": "s3://bucket/t_123/files/f_789.pdf"
  },
  "limits": {
    "maxTokens": 200000,
    "maxToolCalls": 40,
    "maxRuntimeSeconds": 900
  }
}
```

That limits block looks boring. It saves you.
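A payload like the one above only helps if the consumer validates it before doing any work. A minimal sketch of that check follows. The dataclass names, the allowed priority values, and the tenant prefix rule are assumptions layered on top of the payload, not an established schema.

```python
import json
from dataclasses import dataclass

ALLOWED_PRIORITIES = {"interactive", "batch"}  # assumed set of values


@dataclass(frozen=True)
class JobLimits:
    max_tokens: int
    max_tool_calls: int
    max_runtime_seconds: int


@dataclass(frozen=True)
class AIJob:
    job_id: str
    tenant_id: str
    user_id: str
    feature: str
    priority: str
    file_key: str
    limits: JobLimits


def parse_job(raw: str) -> AIJob:
    """Parse and validate a job payload; raise ValueError on anything suspicious."""
    data = json.loads(raw)
    limits = JobLimits(
        max_tokens=int(data["limits"]["maxTokens"]),
        max_tool_calls=int(data["limits"]["maxToolCalls"]),
        max_runtime_seconds=int(data["limits"]["maxRuntimeSeconds"]),
    )
    job = AIJob(
        job_id=data["jobId"],
        tenant_id=data["tenantId"],
        user_id=data["userId"],
        feature=data["feature"],
        priority=data["priority"],
        file_key=data["inputs"]["fileKey"],
        limits=limits,
    )
    if job.priority not in ALLOWED_PRIORITIES:
        raise ValueError(f"unknown priority: {job.priority}")
    if min(limits.max_tokens, limits.max_tool_calls, limits.max_runtime_seconds) <= 0:
        raise ValueError("limits must be positive")
    # Refuse inputs that point outside the tenant's own storage prefix.
    if f"/{job.tenant_id}/" not in job.file_key:
        raise ValueError("fileKey is outside the tenant prefix")
    return job


PAYLOAD = {
    "jobId": "uuid",
    "tenantId": "t_123",
    "userId": "u_456",
    "feature": "document_ingestion",
    "priority": "batch",
    "inputs": {"fileKey": "s3://bucket/t_123/files/f_789.pdf"},
    "limits": {"maxTokens": 200000, "maxToolCalls": 40, "maxRuntimeSeconds": 900},
}
JOB = parse_job(json.dumps(PAYLOAD))
```

Rejecting a job whose file key does not match its tenant is a cheap second line of defense if a producer ever builds the payload wrong.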

Where Apptension’s SaaS Boilerplate helps

The boilerplate gives you a clean place to implement:

- tenant aware auth and request context

- plan and quota primitives

- background job structure

You still need to decide how strict you want to be with AI limits. But you do not start from a blank repo.

Cost control: make AI spend visible, bounded, and explainable

Cost control is not a finance problem. It’s an architecture problem.

Pick isolation per subsystem

Data, vector, compute, logs

Isolation is a slider. Don’t treat it as one global decision. If you can’t afford full separation, isolate the parts that can leak data first: vector search, caches, and logs.

What works in practice:

- Enforce tenant scoping in one place (tenant scoped repositories or row level security). Don’t rely on developers remembering `WHERE tenant_id = ...`.

- For embeddings and files: use tenant namespaces in the vector store and tenant prefixes in object storage.

Tradeoff to surface: separate vector indexes per tenant reduce leakage risk and simplify deletes, but cost scales with tenant count. Measure index size and query volume per tenant before committing.

If you want predictable margins, you need three things:

- attribution (who spent it)

- budgets (how much they can spend)

- controls (what happens when they hit the budget)

Key Stat: 76% of consumers get frustrated when organizations fail to deliver personalized interactions. Personalization often means more AI calls. That frustration can turn into churn if your AI features get throttled without explanation.

What to measure (and how to store it)

If you don’t have numbers yet, treat this as a hypothesis and instrument it.

Track per tenant, per feature, per day:

- model calls

- input tokens and output tokens

- embedding tokens

- vector queries

- queue wait time and job runtime

- cache hit rate for retrieval

Store usage as immutable events. Aggregate later.
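"Immutable events, aggregate later" can be as simple as the sketch below. The field names and sample values are assumptions. A real system would persist events to an append only table instead of a list.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)  # frozen: an event is never edited after it is recorded
class UsageEvent:
    tenant_id: str
    feature: str
    day: date
    input_tokens: int
    output_tokens: int


def aggregate(events):
    """Roll raw events up to (tenant, feature, day) totals."""
    totals = defaultdict(
        lambda: {"model_calls": 0, "input_tokens": 0, "output_tokens": 0}
    )
    for event in events:
        bucket = totals[(event.tenant_id, event.feature, event.day)]
        bucket["model_calls"] += 1
        bucket["input_tokens"] += event.input_tokens
        bucket["output_tokens"] += event.output_tokens
    return dict(totals)


# Made-up sample events, one per model call.
EVENTS = [
    UsageEvent("t_123", "chat", date(2024, 1, 1), 1200, 300),
    UsageEvent("t_123", "chat", date(2024, 1, 1), 800, 200),
    UsageEvent("t_123", "document_ingestion", date(2024, 1, 1), 50_000, 0),
    UsageEvent("t_456", "chat", date(2024, 1, 2), 400, 100),
]
TOTALS = aggregate(EVENTS)
```

Keeping the raw events means you can re-aggregate when pricing changes or when a tenant disputes a bill.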

Cost control levers that actually work

- Hard budgets: stop jobs when the tenant hits a daily or monthly cap

- Soft budgets: degrade gracefully (smaller context, cheaper model) instead of failing

- Feature level quotas: cap expensive flows like bulk ingestion separately from chat

- Plan based defaults: different concurrency limits per plan

- Explainability: show users what happened and what to do next

Common cost traps (and mitigations)

- Trap: embedding the same document repeatedly

  - Fix: content hashing and deduping

- Trap: chunking that produces 10x more chunks than expected

  - Fix: chunk size guardrails and file type specific parsers

- Trap: agent tool loops

  - Fix: max tool calls, max runtime, and tool call tracing
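The first two fixes fit in a few lines. This is a sketch under stated assumptions: the dedupe set lives in memory (a real system would store hashes next to the embeddings), chunking is by character count, and the default cap of 500 chunks is an arbitrary illustration.

```python
import hashlib


class EmbeddingDeduper:
    """Skips content that was already embedded for the same tenant."""

    def __init__(self):
        self._seen = set()

    def should_embed(self, tenant_id: str, content: bytes) -> bool:
        # Scope the hash by tenant so one tenant's uploads never reveal
        # anything about another tenant's documents.
        key = (tenant_id, hashlib.sha256(content).hexdigest())
        if key in self._seen:
            return False
        self._seen.add(key)
        return True


def chunk_with_guardrail(text: str, chunk_size: int = 1000, max_chunks: int = 500):
    """Split text into fixed-size chunks, refusing inputs that would explode."""
    expected = -(-len(text) // chunk_size)  # ceiling division
    if expected > max_chunks:
        raise ValueError(f"{expected} chunks exceeds the cap of {max_chunks}")
    return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
```

Failing the job with a clear "file too large" error is usually cheaper than embedding tens of thousands of chunks nobody budgeted for.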

Insight: If you can’t explain a tenant’s AI bill in one screen, you will end up discounting invoices.

A simple budget policy that is easy to enforce

- Free plan: small monthly token budget, strict concurrency limit

- Pro: higher budget, soft throttling after threshold

- Enterprise: negotiated budget, optional dedicated workers and vendor accounts

You can represent this in config and enforce it in one place, ideally at the queue consumer.
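One way to express that policy as config plus a single decision function. Every number here is an illustrative assumption, as are the plan keys and the three outcomes. The function is meant to be called by the queue consumer before it starts a job.

```python
# Illustrative numbers only; real budgets come from your pricing.
PLAN_POLICY = {
    "free": {"monthly_tokens": 100_000, "soft_pct": 100, "max_concurrency": 1},
    "pro": {"monthly_tokens": 5_000_000, "soft_pct": 80, "max_concurrency": 4},
    "enterprise": {"monthly_tokens": 50_000_000, "soft_pct": 90, "max_concurrency": 16},
}


def decide(plan, used_tokens, requested_tokens, negotiated_tokens=None):
    """Return "allow", "throttle", or "reject" for a job about to run."""
    policy = PLAN_POLICY[plan]
    # Enterprise contracts can override the plan default with a negotiated budget.
    budget = negotiated_tokens if negotiated_tokens is not None else policy["monthly_tokens"]
    projected = used_tokens + requested_tokens
    if projected > budget:
        return "reject"  # hard cap
    # Integer comparison avoids float rounding at the threshold.
    if projected * 100 > budget * policy["soft_pct"]:
        return "throttle"  # soft cap: degrade, for example a cheaper model
    return "allow"
```

With `soft_pct` at 100 the free plan has no soft zone, which matches "strict" in the policy above. Pro tenants get throttled in the last 20% of their budget before they are cut off.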

A cost control UI that reduces support tickets

We’ve seen fewer “why is this slow” tickets when the product shows:

- current usage vs plan

- what is queued vs running

- why a job failed (budget hit, file too large, vendor limit)

It’s not fancy. It’s honest.

Guardrails for agents and long runs

If you ship agents or tool calling, enforce:

- max runtime per job

- max tool calls

- max tokens per run

- tool allowlist per feature

- trace logs stored with tenant id

These limits are not pessimism. They are how you keep one tenant from taking down everyone else.
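A minimal sketch of how these limits can wrap an agent loop. The `RunGuard` class and its method are assumptions. The limit values mirror the example job payload, and the `while True` loop stands in for an agent stuck calling the same tool.

```python
import time


class LimitExceeded(Exception):
    """Raised when a run hits one of its guardrails."""


class RunGuard:
    def __init__(self, max_tool_calls, max_tokens, max_runtime_seconds,
                 allowed_tools, clock=time.monotonic):
        self._max_tool_calls = max_tool_calls
        self._max_tokens = max_tokens
        self._max_runtime = max_runtime_seconds
        self._allowed = frozenset(allowed_tools)
        self._clock = clock
        self._started = clock()
        self.tool_calls = 0
        self.tokens = 0

    def record_tool_call(self, tool_name, tokens_used):
        """Call before executing a tool; raises instead of letting the run continue."""
        if tool_name not in self._allowed:
            raise LimitExceeded(f"tool not allowed: {tool_name}")
        if self.tool_calls >= self._max_tool_calls:
            raise LimitExceeded("max tool calls reached")
        if self._clock() - self._started > self._max_runtime:
            raise LimitExceeded("max runtime exceeded")
        self.tool_calls += 1
        self.tokens += tokens_used
        if self.tokens > self._max_tokens:
            raise LimitExceeded("max tokens exceeded")


guard = RunGuard(max_tool_calls=40, max_tokens=200_000,
                 max_runtime_seconds=900, allowed_tools={"search"})
stop_reason = None
try:
    while True:  # stand-in for an agent stuck in a tool loop
        guard.record_tool_call("search", tokens_used=1_000)
except LimitExceeded as exc:
    stop_reason = str(exc)
```

The stop reason is worth storing with the tenant id in the trace log. It is what the UI shows the user, and what support reads first.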

Examples from Apptension delivery: what transfers to AI SaaS

The case studies below are not AI products. But the delivery lessons map directly to multi tenant AI systems.

AI breaks multi tenant

New failure modes to plan for

Common ways teams get surprised:

- Noisy neighbor: one tenant’s PDF batch or agent loop saturates CPU, workers, or vendor rate limits.

- Leakage risk: prompts, retrieval, and caches can cross tenant boundaries if indexes are shared or filters are inconsistent.

- Unbounded work: chunking explosions, tool loops, and long runs turn “one request” into thousands of tokens and calls.

What to do next (measurable): tag every request and job with tenant_id, workload type, and estimated cost. Track p95 latency, queue depth, and cost per tenant. If things look fine at 50 tenants, treat 55 as a load test target, not a surprise.

Miraflora Wagyu: shipping fast without breaking quality

Miraflora Wagyu needed a premium Shopify experience in 4 weeks. The constraint was time and async communication across time zones.

What transfers to AI SaaS:

- tight scope control

- clear ownership of integration points

- a bias toward shipping an end to end slice, then iterating

Example: The timeline pressure forced disciplined decisions. In AI SaaS, the same discipline helps you avoid shipping five half working AI features that you can’t operate.

PetProov: trust and verification flows

PetProov was built in 6 months with a strong focus on secure onboarding and identity verification.

What transfers:

- explicit state machines for long running workflows

- audit friendly logs

- UX that explains what is happening

In AI workloads, your “verification flow” is often ingestion and analysis. Users need progress, retries, and clear errors.
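An explicit state machine for an ingestion job can be very small. The states and allowed transitions below are assumptions for illustration. The useful property is that illegal jumps fail loudly and every legal one leaves an audit entry.

```python
from enum import Enum


class JobState(Enum):
    QUEUED = "queued"
    RUNNING = "running"
    RETRYING = "retrying"
    SUCCEEDED = "succeeded"
    FAILED = "failed"


# Which states each state may move to. Terminal states have no exits.
ALLOWED = {
    JobState.QUEUED: {JobState.RUNNING, JobState.FAILED},
    JobState.RUNNING: {JobState.SUCCEEDED, JobState.RETRYING, JobState.FAILED},
    JobState.RETRYING: {JobState.RUNNING, JobState.FAILED},
    JobState.SUCCEEDED: set(),
    JobState.FAILED: set(),
}


def transition(current, target, audit_log):
    """Move to target if the transition is legal, recording it for audits."""
    if target not in ALLOWED[current]:
        raise ValueError(f"illegal transition: {current.value} -> {target.value}")
    audit_log.append((current.value, target.value))
    return target


# A job that fails once, retries, and then succeeds.
LOG = []
state = JobState.QUEUED
for nxt in (JobState.RUNNING, JobState.RETRYING, JobState.RUNNING, JobState.SUCCEEDED):
    state = transition(state, nxt, LOG)
```

The same states map directly to what the UI shows: queued, running, retrying, done, or failed with a reason.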

blkbx: payments and one click flows

blkbx focused on reducing friction with a simple checkout flow tied to Stripe.

What transfers:

- usage based billing patterns

- strong idempotency (payments teach this fast)

- careful handling of edge cases

Insight: If you can build payment flows without double charging, you can build AI job processing without double embedding.

What we build into the architecture early

- Tenant aware request context and authorization

- Usage events for every model call and embedding run

- Job based AI workflows with retries and idempotency keys

- Per tenant rate limits and concurrency caps

- Separate storage namespaces for files and embeddings

A note on emergent behavior and why it matters operationally

In our internal R&D work on LLM agents for exploratory data analysis (Project LEDA), we saw a pattern: once you give an agent tools, it will surprise you.

Not always in a good way.

Operationally, that means you should assume:

- longer tails in runtime

- occasional tool misuse

- higher variance in token usage

So you design guardrails first, then you let the agent roam.

Conclusion

Multi tenant SaaS with AI workloads is mostly about discipline.

Not more services. Not more dashboards. Just clear boundaries, clear limits, and numbers you trust.

If you’re building on Apptension’s SaaS Boilerplate, you can move faster on the boring parts and spend your time on the decisions that matter: isolation, scaling, and cost control.

Actionable next steps:

- Pick an isolation baseline for relational data and vector data. Write it down.

- Make AI async by default. Add job ids, progress, and retries.

- Instrument usage events per tenant and per feature. No exceptions.

- Set budgets and limits early. Start strict. Loosen later.

- Run a noisy neighbor test: one tenant uploads 100 large files. Measure impact on others.

Insight: The best multi tenant AI system is the one where you can answer two questions fast: “Who is affected?” and “How much did it cost?”

FAQ: questions we hear from teams building this

Do we need a database per tenant?

- Not always. Start with shared DB plus strict tenant scoping. Move enterprise tenants to separate DBs when compliance or blast radius demands it.

Should we share one vector index across tenants?

- It can work, but it is easy to get wrong. If your product handles sensitive data, a separate index per tenant is the safer default.

What is the first cost control feature to ship?

- Usage attribution. If you can’t tie spend to tenant and feature, budgets are guesswork.

When do we split worker pools?

- When AI jobs start affecting core API latency, or when you see queue times for interactive requests increase during batch workloads.