Introduction

Most legacy SaaS products don’t need a rewrite. They need a way to ship new capabilities without breaking billing, auth, reporting, or the weird edge cases that keep revenue stable.

AI features add a twist: you’re not just changing code. You’re changing behavior. Outputs can drift. Costs can spike. Security reviews get harder.

A boilerplate approach helps because it forces decisions early. Not the fun ones. The boring ones that prevent a two-month prototype from turning into a two-year migration.

Here’s the frame we use: treat AI as a new product surface, not a sidecar script. Ship it behind flags. Measure it like you measure payments.

What this article covers:

- How to pick a migration strategy that doesn’t stall delivery

- How to use a boilerplate to standardize AI integration work

- How to roll out incrementally with clear kill switches

- What tends to fail, and how to reduce the blast radius

Insight: The fastest AI rollout is usually the one with the best rollback plan.

What we mean by boilerplate (and what we don’t)

Boilerplate is not a template that makes decisions for you. It’s a starter kit that makes the right decisions hard to avoid.

In practice, a good AI modernization boilerplate includes:

- Standard service boundaries and folder structure

- Auth, permissions, audit logs, and rate limiting patterns

- Observability defaults (traces, structured logs, cost metrics)

- Feature flags and gradual rollout hooks

- Evaluation harnesses (golden datasets, regression tests)

It should also include the stuff teams forget until production:

- Prompt versioning and change logs

- Data retention rules

- A safe fallback path when the model fails

When this approach is the right fit

This approach works best when:

- You have a working SaaS with paying users

- You can’t pause roadmap delivery for a rewrite

- You need AI features but also need compliance and predictability

It’s a weaker fit when:

- The product is pre-product-market fit and still changing weekly

- Your data is too messy to support AI outputs yet (no labels, no ground truth)

- You can’t instrument usage or outcomes (you’ll be flying blind)

Key Stat: 76% of consumers get frustrated when organizations fail to deliver personalized interactions.

If you’re adding AI to improve personalization, you need to measure whether it actually reduces that frustration. Otherwise you’re just adding cost.

First slice ideas that usually behave well

- Drafting internal notes from structured records (with human review)

- Summarizing long support threads into a proposed resolution

- Classifying inbound requests into existing categories

- Suggesting next best actions in admin tools, not customer-facing UI

Avoid as a first slice:

- Automated refunds, cancellations, or billing changes

- Anything that changes permissions

- Anything that sends outbound messages without review

Understand the legacy SaaS constraints before you add AI

Legacy SaaS isn’t “old code”. It’s code that already has contracts with customers, internal teams, and integrations.

The AI work will fail if you don’t map those contracts first.

Common constraints we see:

- Hidden coupling: one change in onboarding breaks invoicing

- Data gravity: the data you need lives in five systems and two spreadsheets

- Permission complexity: roles evolved over years and nobody trusts the docs

- Performance ceilings: your app is already close to its latency budget

- Compliance drift: “we’re GDPR compliant” means three different things internally

Insight: If your SaaS can’t explain why a number is on a dashboard, it can’t safely explain why an AI output is correct.

Quick diagnostic checklist

Run this before writing a single prompt:

- List the top 3 workflows where AI could remove manual work.

- For each workflow, write down:

  - Input data sources

  - Output destination (UI, API, email, exports)

  - Who can see it (roles)

  - What happens when it’s wrong

- Identify one “safe failure” behavior.

If you can’t answer those, the first sprint is discovery and instrumentation, not model selection.

Legacy readiness signals

- Stable domain model: core entities don’t change weekly

- Event logs exist: you can reconstruct what users did

- Support tags are usable: you can group issues by theme

- Clear ownership: someone owns data quality, not just pipelines

- Known latency budget: you know what “too slow” means

What we learned from retail builds (and why it matters for SaaS)

In retail projects like blkbx, the product promise was simple: create a simple checkout flow that works across social, ads, and QR codes. That sounds small until you hit the real constraints: payments, retries, fraud, and edge cases.

AI modernization feels similar. The first demo looks easy. Production is where the contracts show up.

In Miraflora Wagyu, the constraint was time and async communication across time zones. The lesson is not “move faster”. It’s this: if feedback loops are slow, your rollout plan needs more guardrails, not fewer.

So for legacy SaaS: assume you will have slow feedback at some point. Build the system so it can survive that.

Where AI usually creates new risk

AI tends to add risk in places legacy systems are already weak:

- Ambiguous requirements: “make it smarter” is not a spec

- Non-deterministic behavior: the same input can produce different output

- Cost variability: usage spikes can turn into a surprise bill

- Data handling: logging for debugging can become a privacy incident

Mitigation starts with naming the risk. Then you design around it:

- Put AI outputs behind a review step when correctness matters

- Add hard limits: tokens, retries, concurrency

- Log metadata, not raw sensitive text, unless you have a policy and a reason
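The hard limits in the list above can live in one small wrapper instead of being scattered across features. The following is a minimal sketch, not a definitive implementation: `HardLimits`, `LimitedCaller`, and the default values are hypothetical names and numbers chosen for illustration, and the wrapped function stands in for whatever actually calls your model.

```python
import threading
import time
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class HardLimits:
    # Illustrative defaults only; tune them per feature and per provider.
    max_retries: int = 2          # extra attempts after the first call
    max_concurrency: int = 4      # simultaneous in-flight model calls
    backoff_seconds: float = 0.5  # base delay, doubled on each retry


class LimitedCaller:
    """Runs a model call with capped concurrency and a capped retry count."""

    def __init__(self, limits: HardLimits, sleep: Callable[[float], None] = time.sleep):
        self._limits = limits
        self._slots = threading.BoundedSemaphore(limits.max_concurrency)
        self._sleep = sleep  # injectable so tests do not have to wait

    def call(self, fn: Callable[[], str]) -> str:
        # The slot is held across retries, so a retrying call still counts
        # against the concurrency cap.
        with self._slots:
            for attempt in range(self._limits.max_retries + 1):
                try:
                    return fn()
                except Exception:
                    if attempt == self._limits.max_retries:
                        raise  # out of attempts: surface the last error
                    self._sleep(self._limits.backoff_seconds * (2 ** attempt))
        raise RuntimeError("unreachable")
```

Token caps are usually enforced by the model provider through a request parameter, so they are left out here; the point of the sketch is that retries and concurrency have a hard ceiling that no single feature can exceed.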

Insight: A legacy SaaS can tolerate occasional downtime. It can’t tolerate silent wrongness.

Risk register starter

Use this before the first production rollout

- Silent wrong output: Add user feedback, acceptance tracking, and review queues for risky cases.

- Cost spikes: Add token limits, per-tenant budgets, and alerts on cost per outcome.

- Data leakage: Redact at the gateway, scope context per tenant, and keep audit logs.

- Latency regressions: Set timeouts, cache where safe, and degrade gracefully.

- Model drift: Lock prompt versions, run regression evals, and document changes.

Choose a migration strategy that supports incremental rollout

There are three practical ways to modernize a legacy SaaS with AI. Two are common. One is survivable.

Pick a survivable migration

Ship in slices or stall

Three paths show up in practice:

- Big rewrite: clean slate, but long freeze and hard validation. Use only when the old system is collapsing.

- Sidecar AI: fast pilots and isolation, but often duplicates auth, logging, and becomes a messy dependency.

- Strangler with boilerplate: replace flows gradually with reusable patterns. More discipline upfront, less chaos later.

Rule of thumb: if you cannot ship in slices, you will ship diagrams. In our retail builds (blkbx checkout and Miraflora Wagyu), the first demo was easy; production was payments, retries, fraud, and slow feedback loops. For SaaS, assume feedback will be slow sometimes and design rollout with flags, metrics, and rollback from day one.

The three paths (and what they cost you)

| Strategy | What it looks like | Pros | Cons | When it fits |
|---|---|---|---|---|
| Big rewrite | New platform plus AI features | Clean slate | Long freeze, high risk, hard to validate | Rarely, when the old system is collapsing |
| Sidecar AI | Separate AI service called by legacy app | Fast to pilot, isolated | Can become a messy dependency, duplicated auth and logging | Early experiments, low risk workflows |
| Strangler with boilerplate | New AI enabled slices replace old flows gradually | Controlled rollout, reusable patterns | Requires discipline and instrumentation | Most legacy SaaS with revenue |

Insight: If you can’t ship in slices, you will ship nothing but architecture diagrams.

What “boilerplate first” changes

A boilerplate approach forces you to standardize the parts that otherwise get reinvented per feature:

- How you call the model (SDK wrapper, retries, timeouts)

- How you store prompts and versions

- How you capture evaluations

- How you expose features behind flags

- How you track cost per tenant

That standardization is what makes incremental rollout possible.

Picking the first AI slice

- Pick a workflow with clear inputs and a reversible output.

- Define the fallback behavior (existing logic, human review, or no output).

- Add instrumentation before shipping (latency, cost, acceptance).

- Ship to internal users first.

- Expand by tenant, not by percentage, if your customers vary widely.
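The steps above (internal users first, then expansion by tenant, with a way to turn everything off) can be sketched as a small flag object. This is a minimal sketch under stated assumptions: `FeatureFlag` and its fields are hypothetical, and a real system would load this state from your existing flag service or config store rather than hold it in memory.

```python
from dataclasses import dataclass, field


@dataclass
class FeatureFlag:
    """Tenant-level flag with a kill switch (hypothetical shape)."""

    name: str
    kill_switch: bool = False     # when True, the feature is off for everyone
    internal_only: bool = True    # stage 1: only internal users see it
    enabled_tenants: set[str] = field(default_factory=set)

    def is_enabled(self, tenant_id: str, is_internal_user: bool = False) -> bool:
        # The kill switch wins over every other rule.
        if self.kill_switch:
            return False
        if is_internal_user:
            return True
        if self.internal_only:
            return False
        # Expansion is an explicit tenant allow-list, not a random percentage.
        return tenant_id in self.enabled_tenants


flag = FeatureFlag(name="ai_ticket_summary")
flag.internal_only = False
flag.enabled_tenants.add("tenant-acme")  # illustrative tenant id
```

Checking the kill switch first keeps rollback a one-field change, which is the property that matters when something goes wrong at 2 a.m.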

Example: conversational data analysis as a product surface

In our internal work on Project LEDA (LLM-driven exploratory data analysis), the core lesson was about boundaries. A conversational interface can look like “just chat”. Under the hood, it needs controlled tools, data access rules, and a way to reproduce results.

Legacy SaaS teams often skip those boundaries early, then scramble later when a customer asks: “Why did it say that?”

If you’re building AI analysis features, bake in:

- Query logs and tool call traces

- Dataset scoping per tenant

- Output citations (where the answer came from)

- A way to replay a session with the same prompt version

A simple decision rule for strategy selection

Use this rule of thumb:

- If the feature can be wrong sometimes, start with sidecar AI.

- If the feature affects money, compliance, or user permissions, use strangler with boilerplate.

- If you’re considering a rewrite, first prove the AI feature value with a slice. Then decide.

What to measure during the first slice (hypotheses if you don’t have baselines yet):

- Adoption: % of eligible users who try the feature

- Task time: median time to complete the workflow

- Quality: acceptance rate or edit distance vs human output

- Support load: new tickets per 1000 uses

- Cost: model cost per successful outcome
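The metrics listed above can be computed from a flat list of usage events. The sketch below assumes a hypothetical `UsageEvent` shape and treats both "accepted" and "edited" outcomes as successful; both are illustrative choices, not a prescribed schema.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class UsageEvent:
    user_id: str
    outcome: str            # "accepted", "edited", or "ignored"
    task_seconds: float     # time to complete the workflow
    cost_usd: float         # model cost attributed to this use
    opened_ticket: bool = False


def slice_metrics(events: list[UsageEvent], eligible_users: int) -> dict[str, float]:
    """Compute first-slice metrics; returns {} when there is nothing to measure."""
    if not events or eligible_users <= 0:
        return {}
    # Assumption: a lightly edited output still counts as a successful outcome.
    successes = [e for e in events if e.outcome in ("accepted", "edited")]
    total_cost = sum(e.cost_usd for e in events)
    return {
        "adoption": len({e.user_id for e in events}) / eligible_users,
        "median_task_seconds": median(e.task_seconds for e in events),
        "acceptance_rate": len(successes) / len(events),
        "tickets_per_1000_uses": 1000 * sum(e.opened_ticket for e in events) / len(events),
        # Cost is divided by successes, so ignored outputs make the feature look pricier.
        "cost_per_successful_outcome": total_cost / len(successes) if successes else float("inf"),
    }
```

Dividing total cost by successful outcomes rather than by requests is deliberate: a feature that is cheap per call but mostly ignored should look expensive on the dashboard.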

Key Stat (hypothesis to validate): If the AI feature reduces task time by 20% without increasing support tickets, it’s usually worth scaling. Validate it with your own data.

What to track in the first 30 days

If you can’t measure it, you can’t roll it out safely.

- Task time reduction target: validate per workflow

- P95 latency budget: milliseconds per request

- Acceptance rate goal: accepted or lightly edited

Build the boilerplate: the boring parts that keep you safe

If you only build one thing before shipping AI features, build the boilerplate layer. It’s the difference between “we shipped a feature” and “we can ship ten more without panic”.

Legacy constraints checklist

Map contracts before prompts

Legacy SaaS breaks in hidden places. Before model selection, run a quick diagnostic:

- Pick 3 workflows where AI could remove manual work.

- For each: list inputs, output destination (UI, API, email, exports), roles, and what happens when it’s wrong.

- Define one safe failure behavior (fallback copy, human review queue, or “no result” state).

Watch for common traps: hidden coupling (onboarding breaks invoicing), data gravity (data spread across systems), permission complexity, and latency ceilings. If you cannot explain why a dashboard number exists, treat explainability for AI outputs as a risk, not a feature.

What we include in an AI modernization boilerplate

Minimum set:

- AI gateway service: one place to call models, enforce timeouts, and track cost

- Prompt registry: prompts stored with versions, owners, and change notes

- Evaluation harness: golden datasets, regression tests, and score thresholds

- Feature flags: tenant-level enablement, staged rollout, kill switch

- Observability: traces across app and AI gateway, structured logs, dashboards

Nice to have (usually becomes mandatory later):

- Policy checks: PII detection, forbidden topics, output filters

- Human in the loop: review queues for high-risk outputs

- Data minimization: redaction before sending text to a model
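The evaluation harness in the minimum set above can start very small. The sketch below is one possible shape, assuming a hypothetical `GoldenCase` that only checks for required terms in the output; real harnesses often add graded scoring or human labels, and the threshold is something you would calibrate yourself.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class GoldenCase:
    input_text: str
    must_contain: tuple[str, ...]  # terms a correct output is expected to mention


def run_regression(
    generate: Callable[[str], str],
    cases: list[GoldenCase],
    threshold: float,
) -> tuple[float, bool]:
    """Return (pass rate, whether the pass rate clears the threshold)."""
    if not cases:
        raise ValueError("golden dataset is empty")
    passed = 0
    for case in cases:
        output = generate(case.input_text).lower()
        # A case passes only if every required term appears in the output.
        if all(term.lower() in output for term in case.must_contain):
            passed += 1
    score = passed / len(cases)
    return score, score >= threshold
```

Running this in CI whenever a prompt or model changes turns "the output feels worse" into a number that can block a release.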

Insight: Most AI incidents are not model failures. They’re logging, permissions, or missing limits.

Code example: a thin AI gateway wrapper

This is intentionally boring. That’s the point.

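    # ai_gateway.py
    # Thin wrapper around a model client. Requires Python 3.10+ for `str | None`.
    # redact_pii() and policy_allows() are assumed to be defined elsewhere in the gateway.

    import time
    from dataclasses import dataclass


    @dataclass
    class AIResult:
        text: str
        model: str
        prompt_version: str
        latency_ms: int
        tokens_in: int
        tokens_out: int
        blocked: bool
        error: str | None


    def _elapsed_ms(start):
        """Milliseconds since `start`, measured on the monotonic clock."""
        return int((time.monotonic() - start) * 1000)


    def generate_summary(client, prompt, prompt_version, user_context, limits):
        # Monotonic time avoids negative latencies when the wall clock is adjusted.
        start = time.monotonic()

        # Redact first so neither the policy check nor the model sees raw PII.
        redacted = redact_pii(prompt)

        if not policy_allows(user_context, redacted):
            # Blocked by policy: no model call is made and no tokens are spent.
            return AIResult(
                text="",
                model="",
                prompt_version=prompt_version,
                latency_ms=_elapsed_ms(start),
                tokens_in=0,
                tokens_out=0,
                blocked=True,
                error=None,
            )

        try:
            resp = client.responses.create(
                model=limits.model,
                input=redacted,
                timeout=limits.timeout_seconds,
                max_output_tokens=limits.max_output_tokens,
            )
            return AIResult(
                text=resp.output_text,
                model=limits.model,
                prompt_version=prompt_version,
                latency_ms=_elapsed_ms(start),
                tokens_in=resp.usage.input_tokens,
                tokens_out=resp.usage.output_tokens,
                blocked=False,
                error=None,
            )
        except Exception as e:
            # Never raise into the legacy app: return an empty result the caller
            # can route to its fallback path.
            return AIResult(
                text="",
                model=limits.model,
                prompt_version=prompt_version,
                latency_ms=_elapsed_ms(start),
                tokens_in=0,
                tokens_out=0,
                blocked=False,
                error=str(e),
            )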

You can wire this into any legacy stack because the interface is stable. That stability is what lets you modernize in slices.

What boilerplate buys you in week 2

- Faster second feature (because auth, logging, and flags already exist)

- Safer experiments (because you can limit tenants and roll back)

- Cleaner audits (because you can show prompt versions and access logs)

- Predictable costs (because you can cap tokens and concurrency)

Where teams overbuild

Two traps show up a lot:

- Building an internal prompt editor before you have prompt owners

- Building a complex agent framework before you have one stable use case

Start smaller:

- Store prompt templates in code with version tags

- Add a lightweight registry later when ownership and review process exist
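Storing prompt templates in code with version tags can be as plain as a dictionary keyed by name and version. The sketch below uses only the standard library; `PromptVersion`, `get_prompt`, and the example prompts, owners, and wording are all hypothetical and exist only to show the shape.

```python
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: str       # version tag, bumped on every wording change
    owner: str         # team or person accountable for this prompt
    change_note: str   # why this version exists
    template: Template

    def render(self, **fields: str) -> str:
        # substitute() raises KeyError if a placeholder is missing,
        # so a half-filled prompt never reaches the model.
        return self.template.substitute(**fields)


# Illustrative prompts only; old versions stay in the list so sessions can be replayed.
_PROMPTS = [
    PromptVersion(
        "ticket_summary", "v1", "support-tools", "Initial version.",
        Template("Summarize this support thread:\n$thread"),
    ),
    PromptVersion(
        "ticket_summary", "v2", "support-tools", "Ask for a proposed resolution.",
        Template("Summarize this support thread and propose a resolution:\n$thread"),
    ),
]
PROMPTS = {(p.name, p.version): p for p in _PROMPTS}


def get_prompt(name: str, version: str) -> PromptVersion:
    """Look up an exact prompt version; unknown pairs raise KeyError."""
    return PROMPTS[(name, version)]
```

Because versions live in the repository, every prompt change goes through the same code review and changelog as any other change, and the owner field gives reviewers someone to ask.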

Insight: A prompt registry without ownership is just a place to hide broken prompts.

Security and compliance: practical defaults

If you work in regulated industries, you can’t treat AI like a toy. But you also can’t treat it like a full rewrite.

Practical defaults we’ve seen work:

- Zero trust mindset: every AI call is authenticated and authorized

- Tenant isolation: never let the model see cross-tenant context

- Audit logs: who requested output, what prompt version, what tools were used

- Retention rules: decide what you store and for how long

If you’re using third-party models:

- Document where data goes

- Decide whether you can log raw prompts

- Add redaction at the gateway, not in each feature
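Redaction at the gateway means one function that every outbound prompt passes through. The sketch below is deliberately naive and is an assumption about what a first version might look like: two regular expressions for emails and phone-like digit runs. It will miss many kinds of PII and will flag some harmless numbers, so treat it as a placeholder for a proper detection step, not as a compliance control.

```python
import re

# Simplified patterns for illustration; real PII detection needs far more coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like digit runs with placeholders."""
    # Emails go first so digits inside an address are not mistaken for a phone number.
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


sample = "Mail jane.doe@example.com or call +1 415 555 0100 today"
redacted = redact_pii(sample)
```

Keeping this in the gateway means a new feature cannot forget to redact, and improving the patterns later improves every feature at once.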

What to measure:

- Policy blocks per 1000 requests

- Incidents caused by mis-scoped permissions

- Time to produce an audit trail for one customer request

Roll out incrementally: flags, canaries, and human review

Incremental rollout is not a nice-to-have. It’s the only way to learn without burning trust.

Boilerplate means guardrails

Standardize the boring parts

Boilerplate is a starter kit that makes bad defaults hard to ship. Use it to lock in:

- Auth, permissions, audit logs, rate limits (so AI does not bypass existing controls)

- Observability by default: traces, structured logs, and cost per request metrics

- Feature flags + gradual rollout hooks with a clear kill switch

- Evaluation harness: golden datasets, regression tests, and prompt versioning

What fails without this: every AI feature invents its own wrapper, logging, and fallback. You get inconsistent behavior, higher support load, and no clean rollback when outputs drift.

A rollout plan that fits legacy SaaS reality

Use a staged plan. Keep it boring.

- Internal dogfood: support and ops use it first

- Friendly tenants: pick customers who tolerate change and give feedback

- Tenant cohort rollout: expand by similar usage patterns

- Default on for new tenants: only after you have stable metrics

In every stage, define:

- A success metric

- A failure metric

- A rollback trigger

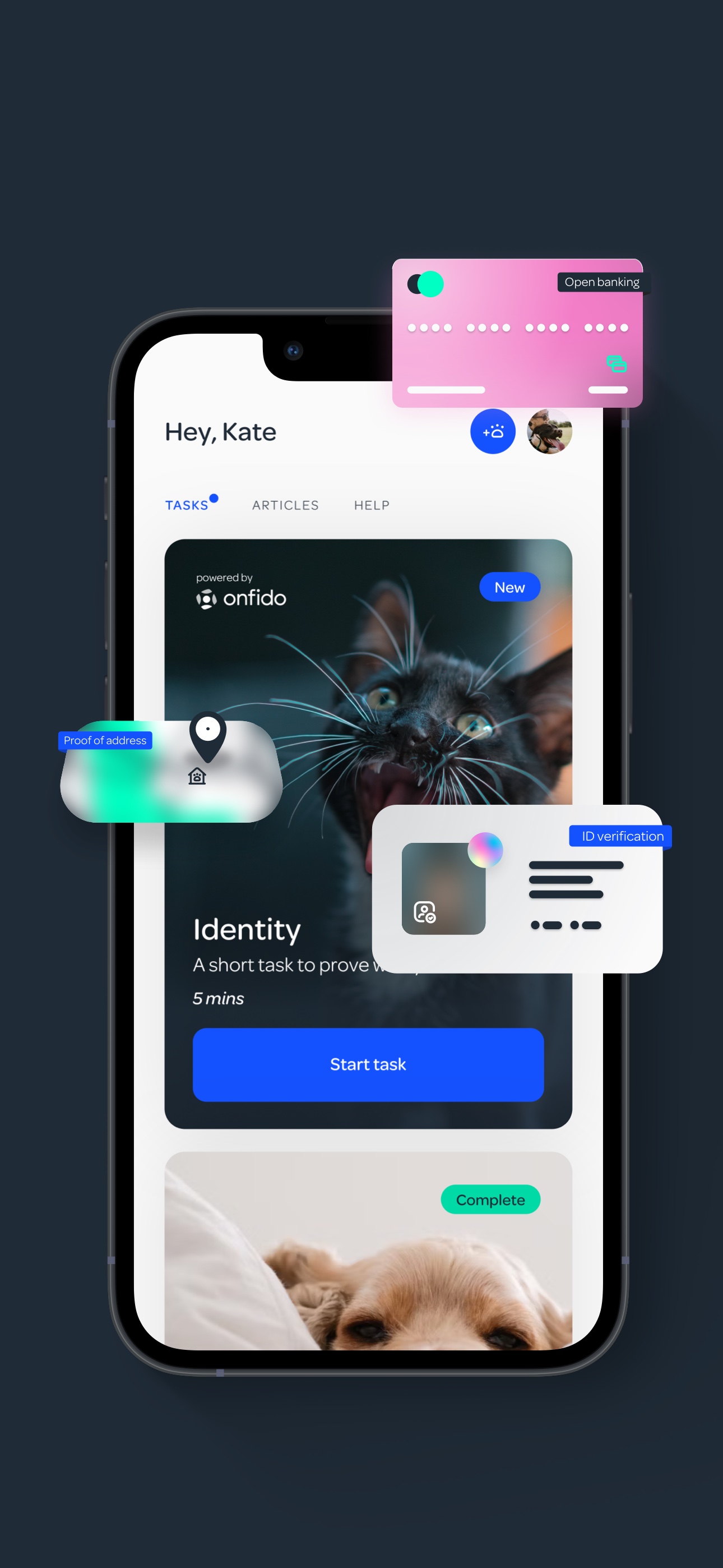

Example: In PetProov, the product depended on trust and identity verification. When trust is the product, you design flows so a user can recover from uncertainty. AI features need the same mindset: clear fallbacks and review paths.

Human in the loop without slowing everything down

Human review does not mean every output needs approval. It means you route the risky ones.

Common rules:

- Review when confidence is low

- Review when output affects money or compliance

- Review when the user is new or unverified

You can implement this with:

- A queue in your admin panel

- A “needs review” status on the record

- A diff view: AI suggestion vs final decision
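The routing rules above translate into a small function that returns the reasons an output needs review. This is a sketch under assumptions: `OutputContext`, the 0.8 confidence floor, and the 30-day "new user" window are hypothetical values, and how you obtain a confidence score depends on your model and task.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OutputContext:
    confidence: float                 # 0.0 to 1.0, however your system estimates it
    affects_money_or_compliance: bool
    user_verified: bool
    user_age_days: int


def review_reasons(
    ctx: OutputContext,
    min_confidence: float = 0.8,  # illustrative threshold
    new_user_days: int = 30,      # illustrative definition of "new"
) -> list[str]:
    """Return every rule that routes this output to human review."""
    reasons = []
    if ctx.confidence < min_confidence:
        reasons.append("low_confidence")
    if ctx.affects_money_or_compliance:
        reasons.append("money_or_compliance")
    if not ctx.user_verified or ctx.user_age_days < new_user_days:
        reasons.append("new_or_unverified_user")
    return reasons


def needs_review(ctx: OutputContext) -> bool:
    return bool(review_reasons(ctx))
```

Returning reasons instead of a bare boolean gives the review queue something to display and gives you a breakdown of why outputs get routed, which helps when tuning thresholds.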

Incremental AI rollout questions

Should we A/B test AI features? Sometimes. For workflows with clear outcomes, yes. For trust-sensitive workflows, tenant-based rollout is safer than random user splits.

What if the model gets worse over time? Assume it will. Lock prompt versions. Track quality metrics. Re-run evaluations when you change models or prompts.

Do we need microservices first? Not always. You need clear boundaries. A sidecar AI gateway can work even if the core app is monolithic.

How do we keep costs predictable? Put budgets in code: token limits, rate limits, and per-tenant caps. Track cost per successful outcome, not per request.

Key Stat (hypothesis to validate): In many SaaS products, 5% of tenants generate 50% of usage. If that’s true for you, per-tenant cost caps matter more than global caps.
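"Budgets in code" with per-tenant caps can be sketched as a small in-memory tracker. The class name, cap values, and tenant ids below are hypothetical; a production version would persist spend, reset it per billing period, and handle concurrent updates.

```python
from collections import defaultdict
from typing import Optional


class TenantBudget:
    """Tracks spend per tenant and refuses calls that would exceed the cap."""

    def __init__(self, default_cap_usd: float, overrides: Optional[dict[str, float]] = None):
        self._default_cap = default_cap_usd
        self._overrides = dict(overrides or {})  # per-tenant caps for heavy users
        self._spent: dict[str, float] = defaultdict(float)

    def cap_for(self, tenant_id: str) -> float:
        return self._overrides.get(tenant_id, self._default_cap)

    def try_spend(self, tenant_id: str, estimated_cost_usd: float) -> bool:
        # Check before the model call so an over-budget request costs nothing.
        if self._spent[tenant_id] + estimated_cost_usd > self.cap_for(tenant_id):
            return False
        self._spent[tenant_id] += estimated_cost_usd
        return True

    def spent(self, tenant_id: str) -> float:
        return self._spent[tenant_id]


budget = TenantBudget(default_cap_usd=1.0, overrides={"tenant-heavy": 5.0})
```

When `try_spend` returns False, the caller should take the fallback path rather than fail loudly, so one heavy tenant hitting its cap looks like "AI suggestion unavailable" instead of an outage.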

What to instrument on day one

If you don’t measure it, you will argue about it.

Track these for every AI call:

- Tenant id, user id, feature name

- Prompt version, model name

- Latency, error rate, retries

- Tokens in, tokens out, estimated cost

- User action after output (accepted, edited, ignored)

Then build two dashboards:

- Product dashboard: adoption, acceptance, task time

- Ops dashboard: latency, errors, cost, policy blocks
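The per-call fields listed above fit naturally into one structured log record that both dashboards can read. The sketch below is one possible shape: `AICallRecord` and its field names are hypothetical, and the per-1,000-token prices are parameters you would fill in from your provider's current pricing rather than values assumed here.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class AICallRecord:
    tenant_id: str
    user_id: str
    feature: str
    prompt_version: str
    model: str
    latency_ms: int
    retries: int
    tokens_in: int
    tokens_out: int
    error: Optional[str] = None
    user_action: Optional[str] = None  # "accepted", "edited", "ignored", or None if not known yet

    def estimated_cost_usd(self, usd_per_1k_in: float, usd_per_1k_out: float) -> float:
        cost = self.tokens_in / 1000 * usd_per_1k_in + self.tokens_out / 1000 * usd_per_1k_out
        return round(cost, 6)

    def to_log_line(self, usd_per_1k_in: float, usd_per_1k_out: float) -> str:
        """Serialize to one JSON line; contains metadata only, no prompt or output text."""
        payload = asdict(self)
        payload["estimated_cost_usd"] = self.estimated_cost_usd(usd_per_1k_in, usd_per_1k_out)
        return json.dumps(payload, sort_keys=True)
```

The record carries no prompt or output text on purpose: the product and ops dashboards only need metadata, which keeps the logging pipeline out of scope for most privacy reviews.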

Handling slow feedback loops (time zones, stakeholders, support)

Miraflora Wagyu was delivered in 4 weeks with a team spread across time zones. The constraint was feedback speed. That constraint shows up in SaaS too, especially in enterprise accounts.

When feedback is slow:

- Prefer tenant cohort rollouts over fast percentage rollouts

- Use longer canary windows (days, not hours)

- Write down rollback criteria in advance

Small habit that helps: after every rollout stage, write a one-page change log:

- What changed

- What we expected

- What we saw (numbers)

- What we’ll change next

It sounds basic. It prevents memory-based decision making.

Incremental rollout checklist

A simple script for each stage

Before enabling a cohort:

- Define success and failure metrics.

- Confirm kill switch works.

- Confirm fallback path works.

- Confirm logs include tenant id, prompt version, and cost.

- Confirm support knows what changed and how to report issues.

After 48 to 72 hours:

- Review adoption, acceptance, and ticket volume.

- Compare cost per outcome vs baseline.

- Decide: expand, hold, or roll back.
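Writing the expand, hold, or roll back decision as code forces the criteria to exist before the numbers arrive. The following is a minimal sketch; `StageReview`, `StageCriteria`, and every threshold in it are hypothetical and should be replaced with the success metric, failure metric, and rollback trigger you defined for the stage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StageReview:
    acceptance_rate: float
    tickets_per_1000_uses: float
    cost_per_outcome_usd: float
    baseline_cost_per_outcome_usd: float


@dataclass(frozen=True)
class StageCriteria:
    # Illustrative thresholds; agree on real ones before enabling the cohort.
    min_acceptance_to_expand: float = 0.6
    rollback_below_acceptance: float = 0.3
    max_tickets_per_1000: float = 20.0
    max_cost_ratio: float = 1.5  # cost per outcome relative to baseline


def decide(review: StageReview, criteria: StageCriteria) -> str:
    """Return "expand", "hold", or "roll_back" for one rollout stage."""
    # Rollback triggers are checked first so a failing stage never ends up on "hold".
    if (review.acceptance_rate < criteria.rollback_below_acceptance
            or review.tickets_per_1000_uses > criteria.max_tickets_per_1000):
        return "roll_back"
    if review.baseline_cost_per_outcome_usd <= 0:
        return "hold"  # no usable baseline yet, so cost cannot be compared
    cost_ratio = review.cost_per_outcome_usd / review.baseline_cost_per_outcome_usd
    if (review.acceptance_rate >= criteria.min_acceptance_to_expand
            and cost_ratio <= criteria.max_cost_ratio):
        return "expand"
    return "hold"
```

The function is trivial on purpose. Its value is that the thresholds are committed to the repository before the stage starts, so the 72-hour review compares numbers to criteria instead of debating them.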

Conclusion

Modernizing a legacy SaaS with AI is mostly about constraints. The model is the easy part. The hard part is shipping behavior changes in a system that already makes money.

A boilerplate approach keeps you honest. It forces you to build the guardrails once, then reuse them. That’s how you migrate without stalling the roadmap.

Next steps you can take this week:

- Pick one workflow where AI output is reversible

- Define the fallback path in writing

- Build a thin AI gateway with logging, limits, and prompt versioning

- Ship behind tenant-level flags

- Measure adoption, acceptance, cost per outcome, and support load

If you do nothing else, do this:

- Add a kill switch

- Log prompt versions

- Track cost per tenant

Insight: The goal is not to add AI. The goal is to add a capability you can operate, explain, and roll back.

When that’s true, you can modernize in slices. And you can keep shipping while you do it.