The journey began with a thorough analysis of the company's current knowledge management system and employee query patterns. Our dedicated team consisted of Generative AI specialists, data engineers, and UX designers, supported by a project manager. With a twelve-week timeline, we set out to create a functional prototype capable of answering questions based on the company's internal documentation.

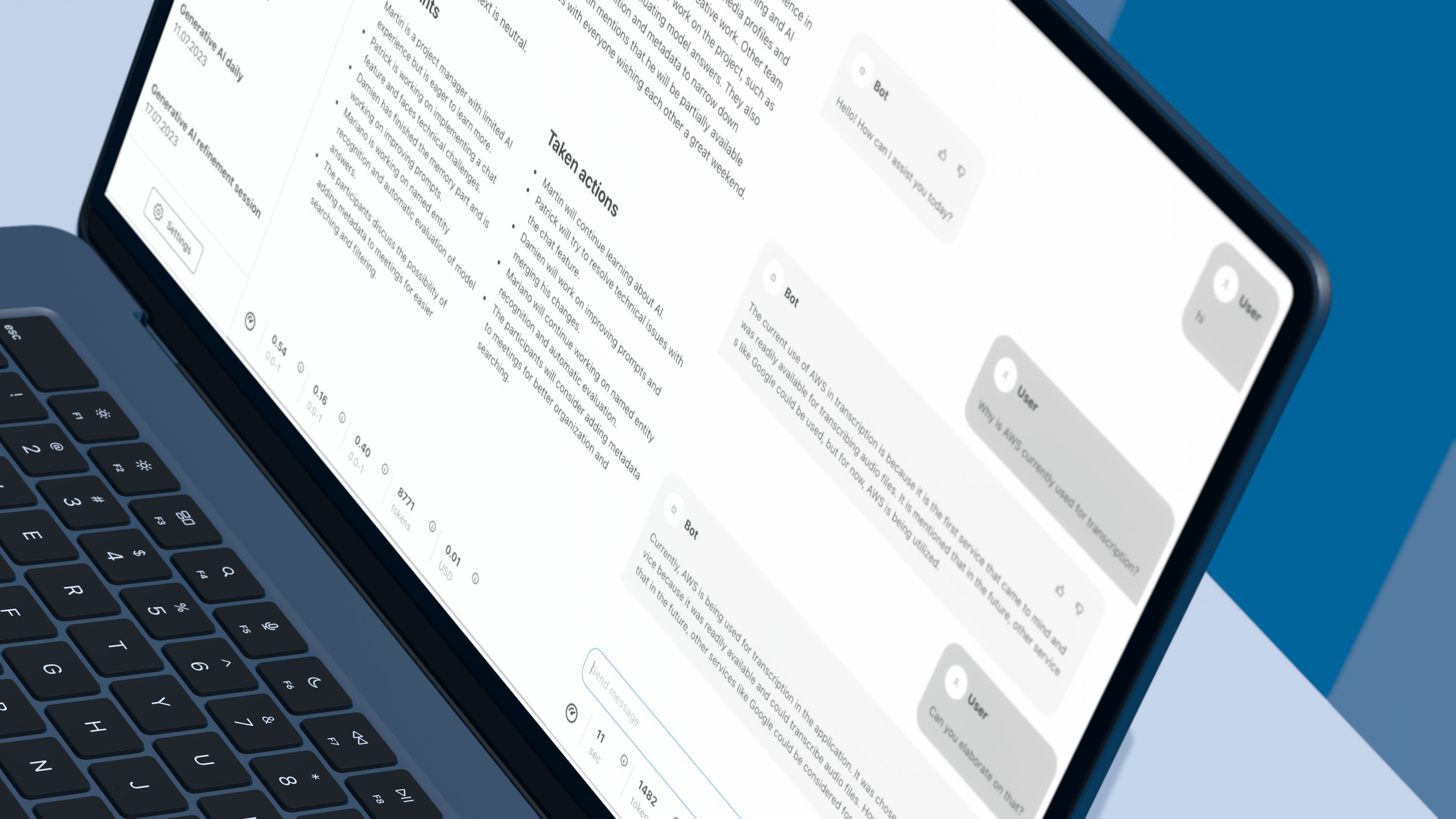

From the outset, our specialists focused on implementing a Retrieval-Augmented Generation (RAG) system. This approach allowed Mobegí to pull relevant information from the company's knowledge base and generate contextually appropriate responses. The team continuously refined the retrieval and generation processes, balancing accuracy with response speed.
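To make the retrieve-then-generate flow concrete, here is a minimal sketch and not Mobegí's actual implementation. It assumes a toy bag-of-words similarity in place of a real embedding model, and the names `retrieve` and `build_prompt` are hypothetical. The generation step is left out: the assembled prompt would be passed to whichever language model is in use.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=2):
    """Return up to k documents most similar to the query (toy retriever)."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine(query_vec, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no tokens with the query.
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(query, passages):
    """Assemble the retrieved passages and the question into one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


# Illustrative documents, invented for this example.
docs = [
    "Employees get 25 days of annual leave.",
    "The office wifi password is rotated monthly.",
    "Expense reports are due by the fifth of each month.",
]
question = "How many days of annual leave do employees get?"
prompt = build_prompt(question, retrieve(question, docs, k=1))
```

Tuning `k` is one place where the accuracy and speed trade-off shows up: more passages give the model more context but lengthen the prompt.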

One innovative strategy developed by our team involved a multi-pass anonymization process. This ensured that sensitive information was protected during processing while maintaining the context necessary for accurate responses. The system was designed to anonymize input queries, process them, and then de-anonymize the responses, all in real time.
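The anonymize, process, de-anonymize round trip could look roughly like the sketch below. It is an illustration under stated assumptions, not the team's actual pipeline: each "pass" here is a simple regular expression for one category of sensitive data (emails, then phone numbers), and the placeholder format and function names are hypothetical. A production system would likely need more robust detection, such as named-entity recognition.

```python
import re

# Each pass targets one category of sensitive data (assumed patterns).
PASSES = [
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("PHONE", re.compile(r"\+?\d[\d\s-]{7,}\d")),
]


def anonymize(text):
    """Replace sensitive values with placeholders; return text and mapping."""
    mapping = {}  # placeholder -> original value

    for label, pattern in PASSES:
        def replace(match, label=label):
            value = match.group(0)
            # Reuse the placeholder if the same value was already seen.
            for placeholder, original in mapping.items():
                if original == value:
                    return placeholder
            placeholder = f"<{label}_{len(mapping) + 1}>"
            mapping[placeholder] = value
            return placeholder

        text = pattern.sub(replace, text)

    return text, mapping


def deanonymize(text, mapping):
    """Restore the original values in a response."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text


# Invented example query.
query = "Email jane.doe@example.com or call +44 20 7946 0958 about the contract."
masked, mapping = anonymize(query)
restored = deanonymize(masked, mapping)
```

Because the placeholders keep their category label, the downstream model can still tell that a token refers to an email address or a phone number, which preserves some context without exposing the value.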

Meanwhile, our data engineers tackled the challenge of efficiently indexing and searching the company's documentation. They implemented a sophisticated chunking strategy and explored various embedding techniques to optimise retrieval accuracy. This involved careful consideration of document structure, content relevance, and query patterns.
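The exact chunking strategy is not described here, so the following is only a simple stand-in: fixed-size word windows with overlap, a common baseline for splitting documents before embedding. The function name and the default sizes are assumptions chosen for illustration.

```python
def chunk_words(text, chunk_size=50, overlap=10):
    """Split text into chunks of chunk_size words, sharing overlap words."""
    if chunk_size <= 0 or overlap < 0 or overlap >= chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")

    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current window has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks


# Tiny synthetic example: ten words, windows of four with one word shared.
sample = " ".join(f"w{i}" for i in range(10))
sample_chunks = chunk_words(sample, chunk_size=4, overlap=1)
```

The overlap means a sentence that straddles a boundary still appears whole in at least one chunk, at the cost of indexing some text twice. Structure-aware splitting (by heading or paragraph) is a natural refinement when document structure matters.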

The UX design team played a crucial role in making Mobegí approachable and easy to use. They integrated the chatbot into the company's existing Slack workspace, allowing employees to interact with Mobegí through a familiar interface. This design choice significantly lowered the adoption barrier and encouraged regular use.